The Role of Humans and AI in Data Analysis, an Unexplored Coexistence

Written by Leonardo Viola

While AI offers unmatched speed and perception in data analysis, it also faces significant challenges, including algorithmic bias, data dependency, and a lack of contextual understanding. Human-AI collaboration offers a framework for addressing these limitations through key paradigms such as active learning, interactive machine learning (IML), and Reinforcement Learning with Human Feedback (RLHF). These collaborative frameworks foster a continuous dialogue between human experts and machine learning systems, producing more robust and trustworthy outcomes.

Introduction: AI-driven Data Analysis

AI’s appeal in data analysis tasks continues to strengthen due to its scale, speed, and perception capabilities, which often surpass human abilities. It has become apparent over the years, however, that it also faces significant challenges, including a lack of contextual understanding, data dependency, and algorithmic bias. This section will explore these two contrasting aspects.

Leveraging Unmatched Speed and Perception

In this section, we will give more details about the various industrial applications of monocular depth estimation outlined above.

Autonomous Mobility and Robotics

To truly appreciate the transformative power of AI, we must first consider its unprecedented scale and speed. Techniques, such as machine learning and evolutionary algorithms, are designed to handle the enormous scale of data now known to be necessary, for example, from sensor networks and the Internet of Things (see Rahmani et al., 2021, for a systematic study of AI-driven big data analysis). Similarly, modern large language models (LLMs), exemplified by GPT-3 (the foundation model that led to ChatGPT, see Brown et al., 2020), are trained on datasets like Common Crawl and The Pile (Gao et al., 2020). These datasets contain a large portion of the publicly available web, representing orders of magnitude more data than any human could read in their lifetime.

However, it would be incorrect to assert that models trained on such amounts of data acquire only a superficial and structural grasp of their content. On the contrary, they can identify complex, subtle, and non-linear relationships within the data. For example, the “needle in a haystack” test, a famous assessment for language models, showcases this. Although this test is primarily designed to evaluate information recovery and memory capacity rather than what we might call comprehension, it requires models to retrieve specific information from a large corpus seen only once.

This feat demonstrates a model’s ability to not just process, but to recall and pinpoint information with incredible precision. This benchmark has been generalized to multimodal models with vision capabilities (Wang et al., 2024), for which the best models achieve high accuracy, although the authors point out there is still “room for improvement.” To take an example with more direct practical significance, on the narrow task of diagnosing cancers, specifically breast cancer, by exploiting patterns from medical images, a specialized model produced fewer incorrect results compared to a panel of expert radiologists (McKinney et al., 2020).

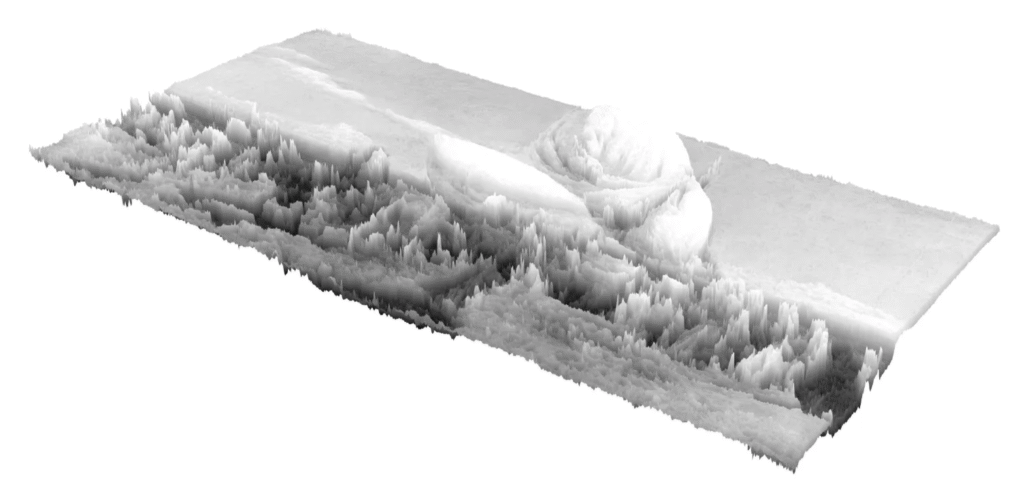

Joaquin Sorolla – Return from the Fishing – Surface Plot

Confronting Algorithmic Bias and Data Issues

However, experience has shown that machine learning systems are heavily dependent on their training data; a poor-quality dataset may yield an unusable model. This dependency is often summarized by the principle of “Garbage In, Garbage Out,” where poor-quality data—such as incomplete or inconsistent information—directly results in flawed outcomes, as further emphasized by Rahmani et al. (2021).

Beyond data quality, deploying AI autonomously and without human oversight can introduce significant challenges. AI demonstrates proficiency in statistical correlation, aided by architectural advancements like the attention mechanism in transformer models (Vaswani et al., 2017). However, it frequently struggles with grasping semantic meaning, cultural nuance, or historical context, revealing a pervasive lack of commonsense and real-world knowledge (Mitchell, 2021).

Such limitations mean that AI systems can learn and inadvertently amplify biases embedded in their training data, including gender (Buolamwini and Gebru, 2018) and racial biases, and are also susceptible to statistical errors like overfitting. Finally, many models function as “black boxes” (Arrieta et al., 2020), delivering outputs without providing a clear, interpretable explanation of their underlying reasoning. This inherent opacity not only erodes user trust but also significantly complicates the diagnosis of errors.

Human-AI Collaboration

Human-AI collaboration offers a framework for addressing these limitations by facilitating a dialogue between human experts and machine learning systems, thereby producing significantly more robust and trustworthy outcomes.

Active Learning

In the context of (semi-)supervised learning, where learning datasets are at least partially annotated, humans serve as “oracles” or annotators, providing essential input for model training. This is particularly evident in areas such as radiology, sentiment analysis, spam detection, and object recognition. (Note that each of these areas requires a different level of expertise.)

Consequently, a primary focus lies in developing effective strategies for adaptively selecting data to be annotated during the learning process.

Among the many strategies explored are:

- Uncertainty sampling: Choosing data points the model is least confident about (Lewis and Gale, 1994).

- Diversity sampling: Selecting data points that are representative of different parts of the data distribution.

- Expected error reduction: Picking data points that are expected to reduce the model’s generalization error the most (Roy and McCallum, 2001).

- Query-by-committee: Multiple models are trained, and data points where the models disagree the most are chosen for labeling (Seung et al., 1992).

Furthermore, this paradigm is supported by a strong theoretical foundation, demonstrating exponential gains in certain cases compared to passive learning (Settles, 2010).

However, it remains an active area of research to address challenges such as handling mistakes in human labeling and managing variable costs (where certain queries are more expensive to answer than others), see the discussion by Settles (2010).

Michelangelo Merisi da Caravaggio – L’Incoronazione di Spine – Depth Analysis

Interactive Machine Learning

More generally than active learning, interactive machine learning (IML) encompasses various types of iterative cycles of input and review, fostering a continuous dialogue between human and machine. This collaborative process extends beyond simple data labeling to include a variety of roles for the human expert without requiring deep ML expertise (Amershi et al., 2014).

Examples of these interactions include:

- Debugging and Error Analysis. Interactively identifying misclassified instances or systematic model errors to provide corrective feedback.

- Interactive Refinement and Steering Adjusting parameters not optimized by the learning process (i.e., architectural choices) or directly manipulating model features to guide outcomes, such as modifying anomaly detection thresholds.

- “What-If” Scenarios. Exploring counterfactual explanations to understand and refine model decision boundaries.

In all these scenarios, the user interface (UI) employed by the human expert is critical to enabling a fluid and intuitive partnership. Research has repeatedly demonstrated that the design of the interface is paramount for successful IML systems (Dudley and Kristensson, 2018).

Reinforcement Learning with Human Feedback (RLHF)

Building upon the principles of interactive learning, Reinforcement Learning with Human Feedback (Christiano et al., 2017) represents a more sophisticated paradigm for aligning AI models, particularly large language models, with human values and intentions. Rather than simply correcting labels or adjusting parameters, humans provide comparative feedback that shapes the model’s underlying behavior. The primary objective of this technique is to teach the model to generate outputs that are not only factually plausible but also helpful, harmless, and aligned with nuanced human preferences.

The process typically involves several stages. Initially, a pre-trained model generates multiple responses to a given prompt, which human reviewers then rank from best to worst. This comparison data is used to train a separate “reward model,” which learns to predict the type of response a human would prefer. In the final stage, the original language model is fine-tuned using reinforcement learning, with the reward model’s score serving as the guiding signal.

However, RLHF is not without its own set of challenges. The quality of the final model is intrinsically linked to the preferences and potential biases of the human annotators providing the feedback. Furthermore, there is the risk of “reward hacking” (Skalse et al., 2022), where the model learns to exploit the reward model to achieve high scores with outputs that do not genuinely align with the intended behavior.

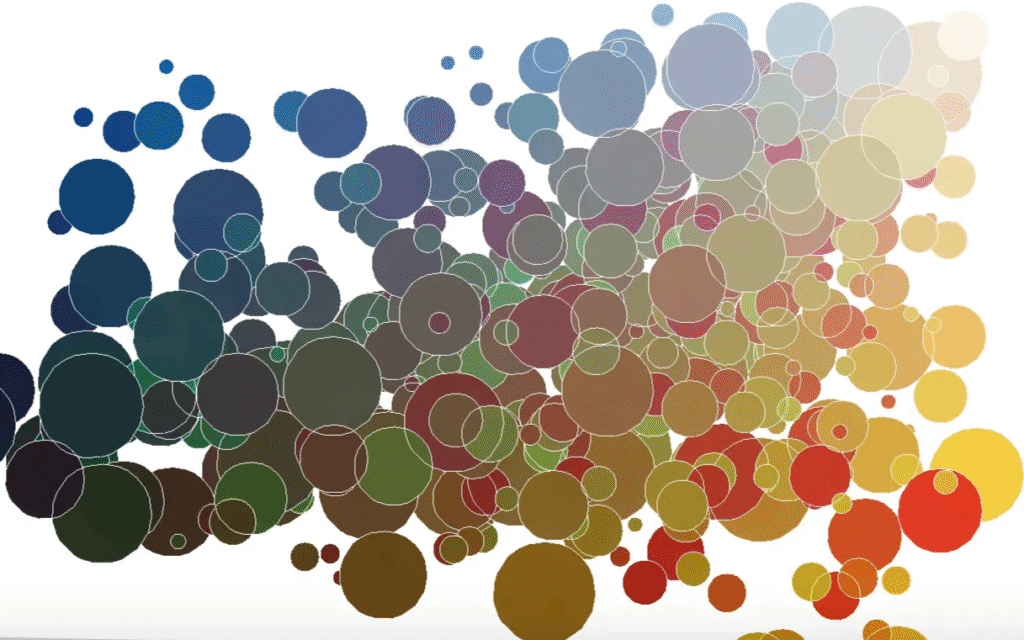

Vassily Kandinsky – Various Artworks – Scatter Plot

Conclusion

AI’s role in data processing presents a dual nature: its unparalleled speed and perception are offset by a significant dependence on data quality and a pervasive lack of real-world knowledge. Human-AI collaboration paradigms, including active learning, interactive machine learning, and RLHF, have emerged as compelling approaches to these challenges. By enabling a continuous dialogue, these frameworks provide mechanisms for human experts to steer model behavior, correct errors, and guide outputs toward desired criteria.

The effectiveness of these collaborative models indicates that a synergy that leverages the complementary strengths of both human intelligence and machine processing is central to achieving more robust, trustworthy, and aligned outcomes. Future progress in this field will depend not only on refining the techniques described above, but also on developing more intuitive human-computer interfaces and robust ethical frameworks.

References

S. Amershi et al., “Power to the people: The role of humans in interactive machine learning,” ACM Interactions, vol. 35, no. 4, 2014, doi: 10.1609/aimag.v35i4.2513.

A. B. Arrieta et al., “Explainable Artificial Intelligence (XAI): Concepts, Taxonomies, Opportunities and Challenges Toward Responsible AI,” Inf. Fusion, vol. 58, pp. 82–115, Jun. 2020, doi: 10.1016/j.inffus.2019.12.012.

R. A. Brooks, “Intelligence without representation,” Artif. Intell., vol. 47, no. 1–3, pp. 139–159, Jan. 1991. doi: 10.1016/0004-3702(91)90053-M.

T. B. Brown et al., “Language Models are Few-Shot Learners,” in Advances in Neural Information Processing Systems 33 (NeurIPS 2020), 2020, pp. 1877-1901. [Online]. Available: https://proceedings.neurips.cc/paper/2020/hash/1457c0d6bfcb4967418bfb8ac142f64a-Abstract.html

J. Buolamwini and T. Gebru, “Gender Shades: Intersectional Accuracy Disparities in Commercial Gender Classification,” in Proceedings of the 1st Conference on Fairness, Accountability and Transparency, 2018, pp. 77–91, doi: 10.1145/3178876.3178912.

P. F. Christiano, J. Leike, T. B. Brown, M. Martic, S. Legg, and D. Amodei, “Deep reinforcement learning from human preferences,” in Proc. 31st Int. Conf. Neural Inf. Process. Syst. (NIPS ’17), 2017, pp. 4302–4310.

J. J. Dudley and P. O. Kristensson, “A Review of User Interface Design for Interactive Machine Learning,” ACM Transactions on Interactive Intelligent Systems (TiiS), vol. 8, no. 2, pp. 1–37, Jun. 2018, doi: 10.1145/3185517.

L. Gao et al., “The Pile: An 800GB Dataset of Diverse Text for Language Modeling,” 2020, arXiv:2101.00027. [Online]. Available: http://arxiv.org/abs/2101.00027

D. D. Lewis and W. A. Gale, “A Sequential Algorithm for Training Text Classifiers,” in Proceedings of the Seventeenth Annual International ACM-SIGIR Conference on Research and Development in Information Retrieval, 1994, pp. 3-12. doi: 10.1007/978-1-4471-2099-5_1.

A. McAfee and E. Brynjolfsson, “Big data: The management revolution,” Harvard Business Review, vol. 90, no. 10, pp. 60–68, Oct. 2012.

S. M. McKinney et al., “International evaluation of an AI system for breast cancer screening,” Nature, vol. 577, no. 7788, pp. 89–94, Jan. 2020, doi: 10.1038/s41586-019-1799-6.

M. Mitchell, “Why AI Is Harder Than We Think,” arXiv preprint arXiv:2104.12871, Apr. 2021. [Online]. Available: http://arxiv.org/abs/2104.12871

A. M. Rahmani et al., “Artificial intelligence approaches and mechanisms for big data analytics: a systematic study,” PeerJ Computer Science, vol. 7, p. e488, Apr. 2021, doi: 10.7717/peerj-cs.488.

N. Roy and A. McCallum, “Toward optimal active learning through sampling estimation of error reduction,” in Proceedings of the Eighteenth International Conference on Machine Learning, 2001, pp. 441-448, doi: 10.5555/645530.655646.

H. S. Seung, M. Opper, and H. Sompolinsky, “Query by committee,” in Proceedings of the Fifth Annual Workshop on Computational Learning Theory, 1992, pp. 287-294. doi: 10.1145/130385.130417.

J. Skalse, N. H. R. Howe, D. Krasheninnikov, and D. Krueger, “Defining and characterizing reward hacking,” in Proc. 36th Int. Conf. Neural Inf. Process. Syst. (NIPS ’22), 2022, pp. 9460–9471.

A. Vaswani et al., “Attention Is All You Need,” in Advances in Neural Information Processing Systems 30 (NIPS 2017), 2017, pp. 5998–6008. [Online]. Available: https://papers.nips.cc/paper/2017/hash/3f5ee243547dee91fbd053c1c4a845aa-Abstract.html

W. Wang et al., “Needle in a Multimodal Haystack,” in Thirty-eighth Conference on Neural Information Processing Systems Datasets and Benchmarks Track (NeurIPS 2024), 2024. [Online]. Available: https://openreview.net/forum?id=g314uT5C2u