Depth Estimation for Artwork Analysis: a QuantumSpace Technological Solution

Written by Davide Chieregato

Three-dimensional data greatly enhances capabilities in numerous applications, yet direct capture requires specialised hardware while often only a two-dimensional image is available or practical to obtain. Monocular depth estimation overcomes this limitation, using deep neural networks to recover structural details from ordinary images. Applied to artwork analysis, the process offers a non-invasive, scalable method to analyze compositional depth, identify physical deformations, and support the digital preservation of art in virtual platforms and archives.

Introduction

In numerous industrial applications, such as autonomous mobility and robotics, consumer and interactive media, specialised professional and scientific tools, and, in our case, artwork analysis, three-dimensional data (that is, the relative depth of objects) significantly enhances capabilities. This is especially true for cultural heritage, where the spatial composition of a work, from the arrangement of figures in a landscape to the use of perspective and shading, holds invaluable information for conservators, historians, and digital archivists. The same can be said of the physical topography of the artwork, from the texture of impasto to the subtle warping of a canvas. However, capturing this data directly requires special-purpose instrumentation such as LiDAR (Light Detection and Ranging), stereo cameras, or structured light, while often only a two-dimensional image is available.

Extensive work has been done in the literature to overcome this limitation and recover structural details from ordinary (two-dimensional) images, a task known as monocular depth estimation. This is most often achieved using machine learning techniques, particularly deep neural networks trained on a vast corpus of data where depth data is already available. Essentially, while depth is not directly contained in a given image, it is present in similar images within the dataset and, as a result, becomes encoded within the trained neural network. This information can then be extracted by running the network on the input.

These machine learning techniques are not merely a fallback, however; they offer distinct advantages over specialised hardware. They are non-invasive (which is especially important for artworks, as we will discuss later), scalable, and cheaper.

Michelangelo Merisi da Caravaggio – L’Incoronazione di Spine – Depth Analysis

Industrial Applications

In this section, we will give more details about the various industrial applications of monocular depth estimation outlined above.

Autonomous Mobility and Robotics

First of all, the safety of self-driving vehicles crucially depends on a comprehensive understanding of their environment, including road geometry, pedestrians, and obstacles, which in turn relies on depth estimation. To reduce costs and thereby enhance accessibility, many systems, including Tesla’s Autopilot (vision-first) and Mobileye, use vehicle-mounted cameras, which offer a more affordable navigation solution than laser-based techniques such as LiDAR.

Similarly, for warehouse robots and autonomous drones, such as delivery drones or agricultural inspection platforms, navigation and object manipulation tasks are performed better with depth information. Because purpose-built sensors increase payload and power demands, monocular depth estimation is often preferred for compact or airborne systems, where it reduces size and power consumption.

Consumer and Interactive Media

An entirely different area is consumer products and services, where three-dimensional data extracted from two-dimensional images (often from a smartphone camera without dedicated depth sensors) is used to improve the user experience.

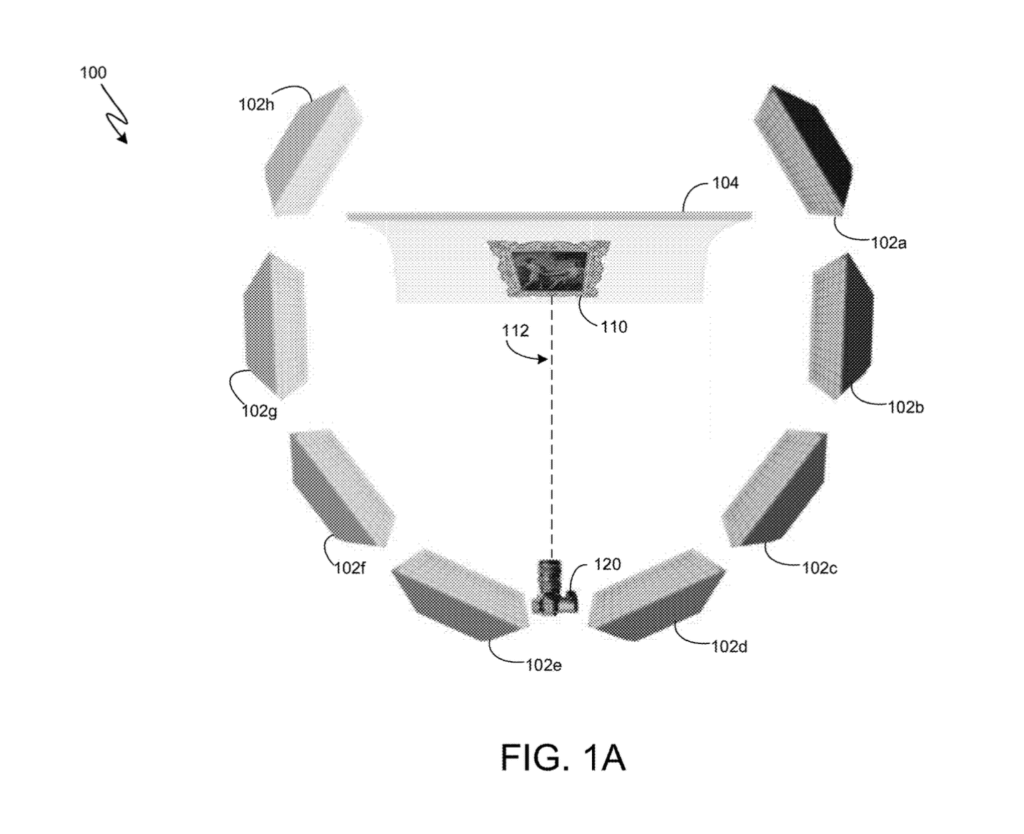

First, the ability to place 3D virtual objects into real scenes opens the door to interactive AR experiences, such as Snapchat Lenses and Instagram AR filters. It also enables new retail experiences that improve customer satisfaction and reduce return rates by showing realistic previews. For example, IKEA Place lets users place virtual furniture in their homes, while services like Warby Parker’s virtual try-on estimate face shape to simulate how eyewear would fit.

In addition, in the context of smart home and surveillance technology, representing a scene in 3D rather than 2D space means better spatial awareness. This enables more accurate detection of people or objects and leads to improved indoor fall detection, pet monitoring, and AI-based home automation, with fewer alerts or mistakes.

Specialised Professional and Scientific Tools

Finally, we will discuss applications of depth estimation that are intended not for general use but for professional and scientific tools. This applies to any specialist who is used to working with two-dimensional images as proxies for spatial objects, although we will limit ourselves to two examples. Dermatologists and wound care physicians measure lesion depth or swelling from photos, especially in remote settings such as telemedicine. Graphic designers, for example in the game industry, likewise create 3D scenes or textures manually from reference images, using volumetric reconstruction pipelines implemented in Adobe tools and Unity/Unreal plugins.

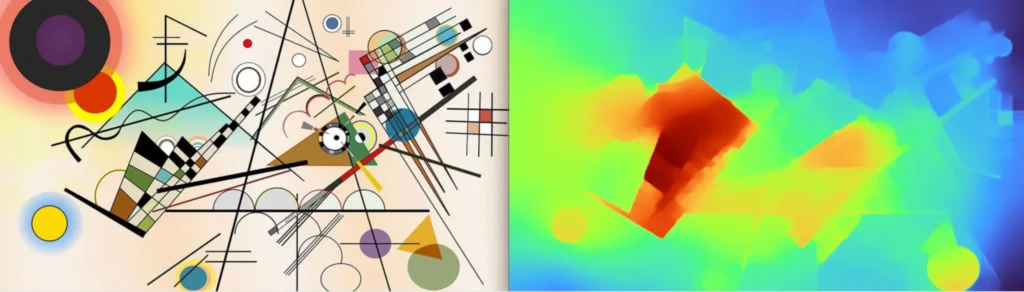

Wassily Kandinsky – Composition VIII – Depth Analysis

Application to Artwork Analysis

We will now turn our attention to a more recent application of depth map inference: its role within an artwork analysis pipeline.

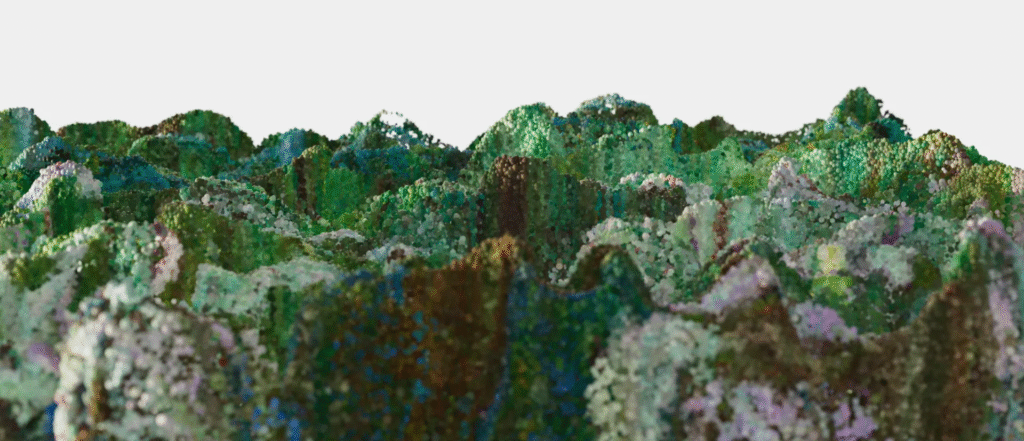

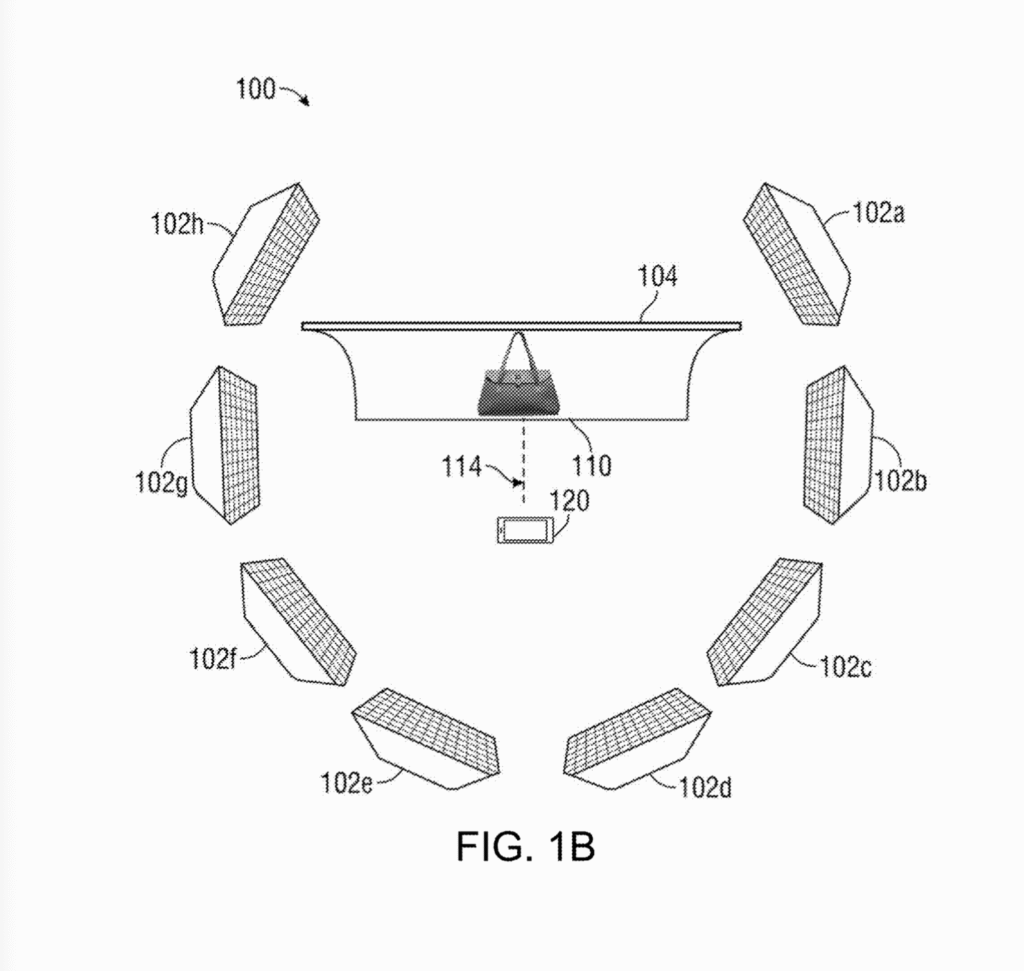

Indeed, when it comes to identifying, digitally preserving, or displaying a piece of art in virtual museum platforms or archives, the implied depth of the depicted scene, together with variations in shape or thickness and deformations of the canvas, is just as important as the color or contrast directly inferable from an RGB image. This information makes it possible to generate high-fidelity 3D mesh models of the depicted scene suitable for interactive viewing, so that audiences can appreciate the spatial arrangement and the physicality of the artwork in a way that flat photographs cannot capture. Similarly, identifying warping, canvas slackening, pressure points, or other physical deformations, including outward bulges or inward recessions, is crucial when assessing damage between two digitizations of the same work. The analysis produces a topographical heatmap in which these subtle structural issues are rendered as clear visual anomalies for conservators.
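To make the comparison between two digitizations more concrete, here is a minimal sketch in Python with NumPy. It is an illustration under stated assumptions, not the pipeline described in this article: the function names `align_scale_shift` and `deformation_map`, the least-squares alignment step, the sign convention, and the `threshold` value are all hypothetical choices. The alignment is included because monocular models typically return relative depth, so two maps of the same work may differ by an unknown scale and offset before any real deformation is considered.

```python
import numpy as np


def align_scale_shift(depth, reference):
    """Fit scale and shift (least squares) so that `depth` matches `reference`.

    Assumption: both maps are 2D arrays of the same shape and already
    registered pixel-to-pixel.
    """
    x = depth.ravel()
    y = reference.ravel()
    design = np.stack([x, np.ones_like(x)], axis=1)
    (scale, shift), *_ = np.linalg.lstsq(design, y, rcond=None)
    return scale * depth + shift


def deformation_map(depth_before, depth_after, threshold=0.05):
    """Return a signed difference map and a boolean anomaly mask.

    The difference is expressed as a fraction of the depth range of the
    earlier map. Which sign corresponds to a bulge or a recession depends
    on the depth convention of the model, so it is left to the caller.
    `threshold` is an illustrative value, not a calibrated one.
    """
    aligned = align_scale_shift(depth_after, depth_before)
    span = np.ptp(depth_before) or 1.0  # avoid division by zero on flat maps
    signed = (aligned - depth_before) / span
    mask = np.abs(signed) > threshold
    return signed, mask
```

The `signed` array can be passed to any plotting library with a diverging color map to obtain a heatmap, while `mask` marks the regions a conservator might inspect first. A plain least-squares fit is slightly biased by the deformations themselves; a robust fit would be a reasonable refinement for large damaged areas.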

However, in many contexts, even when cost is not a factor, it is preferable to avoid non-standard equipment and instead use indirect depth estimation derived from standard (and potentially pre-existing) high-resolution RGB 2D images. For example, in cultural heritage contexts, minimizing contact and maximizing portability are essential.

From a technical standpoint, we run a pretrained neural network on a single high-resolution 2D image of an artwork (which can be a painting, sculpture, or framed piece). The model is run with the PyTorch library, which uses a GPU when one is available. It infers depth information from subtle cues such as shading and texture alone. The result is a fully automatic process that is practical, portable, and scalable.
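The inference step can be sketched as follows. This is a hedged illustration rather than the actual implementation: the article does not name the model, so `PlaceholderDepthNet` is a hypothetical stand-in with random weights, and `estimate_depth`, `pick_device`, and the `input_size` value are assumptions made for the example. Model-specific preprocessing (for instance, mean and standard deviation normalisation) is omitted because it depends on the pretrained network that is actually used.

```python
import torch
import torch.nn.functional as F


class PlaceholderDepthNet(torch.nn.Module):
    """Hypothetical stand-in for a pretrained depth model (random weights)."""

    def __init__(self):
        super().__init__()
        self.conv = torch.nn.Conv2d(3, 1, kernel_size=3, padding=1)

    def forward(self, x):
        return self.conv(x)  # shape (N, 1, H, W)


def pick_device():
    """Use the GPU when one is available, otherwise fall back to the CPU."""
    return torch.device("cuda" if torch.cuda.is_available() else "cpu")


@torch.inference_mode()
def estimate_depth(model, image, input_size=(384, 384)):
    """Run `model` on one RGB image and return relative depth in [0, 1].

    image: float tensor of shape (3, H, W).
    input_size: working resolution of the network (an assumed value).
    """
    device = pick_device()
    model = model.to(device).eval()
    height, width = image.shape[-2:]

    batch = image.unsqueeze(0).to(device)
    batch = F.interpolate(batch, size=input_size, mode="bicubic",
                          align_corners=False)

    pred = model(batch)
    if pred.dim() == 3:  # some models return (N, H, W)
        pred = pred.unsqueeze(1)

    # Resize the prediction back to the resolution of the original image.
    pred = F.interpolate(pred, size=(height, width), mode="bicubic",
                         align_corners=False)

    depth = pred[0, 0]
    depth = (depth - depth.min()) / (depth.max() - depth.min()).clamp_min(1e-8)
    return depth.cpu()
```

With a real pretrained network in place of the placeholder, a call such as `estimate_depth(model, image)` would return a depth map with the same height and width as the input image, ready for visualisation or further analysis.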

Giuseppe Pellizza da Volpedo – Il Quarto Stato – Depth Analysis

Conclusion

In conclusion, monocular depth estimation, powered by deep neural networks, overcomes the limitations of specialised hardware by recovering structural details from ordinary images. While valuable in numerous industrial applications, its use in artwork analysis is especially compelling. The process offers a non-invasive, scalable method to identify physical deformations and support the digital preservation of art. By generating high-fidelity 3D models and topographical heatmaps from standard 2D images, this technique provides invaluable information to conservators and historians.