Socio-Psychological Cases for Human-AI Collaboration in Data Analysis

Written by Francesco Rocchi

The accelerating development of artificial intelligence has revolutionized data analysis across industries, but basic limitations remain in AI’s capacity to grasp the complex psychological and contextual factors essential for specialized applications. This article discusses the emerging paradigm of “hybrid intelligence”, understood here as the combination of human psychological insight with AI computational capability, from the perspective of socio-psychological theory and practical application. Based on current research in human-computer interaction, cognitive science, and domain-specific AI applications, we discuss how human supervision can compensate for AI’s intrinsic blind spots while exploiting its computational strengths.

The article introduces Quantum Space’s “AI 1.5” methodology as a case study in weighted data analysis, in which human psychological expertise orchestrates AI processing to identify insights that purely automated systems cannot. Through analysis of current AI limitations in pattern recognition, contextual comprehension, and psychological profiling, we illustrate how human-AI collaboration represents a necessary development beyond the conventional binary choice between human expertise and machine learning. The research shows that fields demanding psychological interpretation, especially art analysis, cultural studies, and behavioral evaluation, are significantly improved by hybrid models that combine AI processing power with human comprehension of psychological subtlety.

Our results indicate that the way forward in specialized data analysis is not to substitute human insight with artificial intelligence, but rather to forge intelligent partnerships enhancing both human psychological comprehension and machine computational capability. The hybrid approach provides a template for overcoming AI’s present limitations while optimizing its potential for complicated, psychologically-informed analysis across varied applications.

Introduction: The Limitations of Pure AI in Psychological Analysis

Much of the current debate in artificial intelligence sets up a false dichotomy: human expertise vs. machine learning, intuition vs. data, subjective interpretation vs. objective analysis. This binary construction fails to appreciate the capabilities and limitations of today’s AI systems, especially in fields that demand psychological insight and contextual understanding.

Recent developments in machine learning have achieved unprecedented success in pattern recognition, data manipulation and predictive analytics. But they also obscure the large shortcomings of AI systems when faced with tasks that involve psychological interpretation, cultural context or emotional nuance. Research by Malone, Almaatouq, and Vaccaro at MIT found that human-AI teams performed better than humans working alone in many tasks, but surprisingly did not surpass AI systems operating independently in decision-making scenarios (Malone et al., 2024). This indicates that simply blending humans and machines does not guarantee better outcomes without also considering their respective strengths.

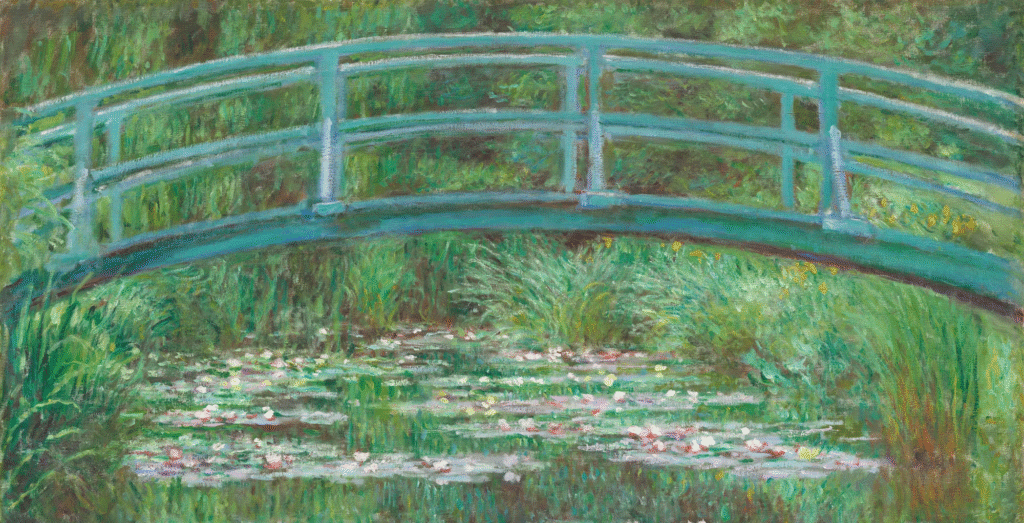

The art world illustrates this gap particularly clearly. While AI can identify stylistic patterns, color distributions, and compositional elements with remarkable precision, it consistently fails to interpret the psychological motivations behind artistic choices, the emotional context of creative decisions, or the cultural significance of symbolic elements. As Chatterjee notes in his analysis of AI’s role in art, people experience “a connection between the creator and receiver transmitted through the object, which lends authenticity to the object”, something that AI systems cannot replicate or fully understand (Chatterjee, 2022).

This gap between computational power and psychological understanding has serious consequences across sectors that rely on nuanced interpretation. Rather than treating it as a barrier, however, new research points to a way forward that is not a stark choice between humans and machines, but one in which the two work together in complex, interdependent ways.

Theoretical Foundations: Socio-Psychological Perspectives on Human-Machine Collaboration

The theoretical basis of human-AI collaboration is interdisciplinary, combining theories from cognitive science, social psychology, and human-computer interaction. Carroll’s foundational work on human-computer interaction established that “HCI continues to provide a challenging test domain for applying and developing psychological and social theory in the context of technology development and use” (Carroll, 1997).

In this context AI is a cognitive amplifier, not a cognitive substitute. Machines can handle far more computation than any human, but contextual interpretation, psychological understanding, and cultural knowledge still elude AI. This symbiosis results in what researchers call “augmented intelligence”, a partnership between human and machine that outperforms what either can do alone.

Social psychology research further supports this collaborative approach by demonstrating how human cognitive biases can be mitigated through structured interaction with objective data analysis. Kahneman’s extensive work on cognitive biases reveals that humans excel at pattern recognition and contextual interpretation but struggle with statistical analysis and large-scale data processing (Kahneman, 2011). His dual-system theory casts “System 1” thinking as fast and intuitive but prone to bias, while “System 2” thinking is slower, more deliberate, and analytical. AI systems can complement human System 2 processing, while humans provide the intuition and context that AI currently lacks.

The field of “socio-technical systems” also offers theoretical support for collaboration between machines and humans. Instead of treating technology as something neutral, this approach holds that systems are most effective when human social processes are closely integrated with the technological elements. Research in cognitive neuroscience suggests that while AI techniques seem inspired by advances in understanding brain function, “the primary pursuits between cognitive neuroscience and AI are distinct” (Chen and Yadollahpour, 2024).

Current State of AI Data Analysis: Capabilities and Blind Spots

Modern AI systems show remarkable ability in some analytic areas but exhibit troubling blind spots in others. Large language models can process huge quantities of text and identify statistical patterns invisible to human analysis, yet they consistently fail to understand contextual nuance, cultural context, or emotional subtext.

While computer vision models can now recognize objects, faces, and even styles of fine art with superhuman precision, they’re not able to parse the psychological causes of artistic choices, or grasp the cultural significance of symbolic details. Research has shown that while people almost always report feeling emotions when viewing art, they consistently rate human-created art more highly than AI-generated work when they know the source, suggesting that psychological connection to human creators remains fundamental to artistic appreciation (Bellaiche et al., 2023).

This pattern extends across all areas that require psychological interpretation. In medical diagnosis, AI systems are good at recognizing pathological patterns in imaging data but are unable to comprehend patient psychology, cultural considerations, or the social context of illness. In financial analysis, AI can digest market data at a pace and precision far beyond that of any human being, but it cannot read the psychological drivers of the market or factor cultural nuance into economic decisions.

The limitations become particularly pronounced in cross-cultural analysis. AI systems trained primarily on Western datasets consistently misinterpret cultural symbols, psychological expressions, and social contexts from non-Western cultures. Wang and colleagues noted that current AI development “cannot well simulate the subjective emotional and mental state changes of human beings” because “this replication of the biology of the human brain cannot well simulate the subjective psychological changes” (Wang et al., 2022).

These blind spots are not just technical limitations to be fixed with more careful algorithms or bigger datasets. They reflect a basic disparity between computational processing and human psychological understanding. Artificial intelligence models are statistical, learning patterns based on frequency and correlation. Human psychological understanding operates through empathy, cultural knowledge, and contextual interpretation, capabilities that emerge from lived experience and social interaction rather than data processing.

The Hybrid Intelligence Paradigm: Beyond Binary Human vs. Machine Approaches

Understanding the psychological limitations of AI has led to the development of hybrid intelligence systems, which combine human intuition with machine capabilities. In contrast to conventional human-computer interfaces, these systems regard human and artificial intelligence as linked parts of an integrated analytical system.

Studies have identified key principles of human-AI collaboration: complementary strengths, transparent communication, and adaptive learning (Gomez et al., 2025). Complementary strengths involve designing systems where humans and AI handle tasks aligned with their respective capabilities. Transparent communication ensures that humans understand AI reasoning while AI systems can incorporate human feedback. Adaptive learning allows both human and machine components to improve through interaction.

Recent research out of MIT seems to indicate that the best opportunities for human-AI collaboration might have more to do with creative tasks than analytical ones. “While AI in recent years has mostly been used to support decision-making by analyzing large amounts of data, some of the most promising opportunities for human-AI combinations now are in supporting the creation of new content” (Malone et al., 2024). This observation may be particularly important for tasks such as art evaluation where creative interpretation and psychological insight are necessary.

Applications of hybrid intelligence are present in a variety of industries. In medicine, diagnostic systems that combine AI’s pattern recognition with a physician’s expertise have been reported to achieve higher accuracy than either used alone. Research has shown that “explainable AI supports domain experts in making more accurate visual inspection decisions, allowing them to validate AI predictions against their own domain knowledge” (Senoner et al., 2024).

The art world has been slower to adopt hybrid methods, in part because of its traditional reluctance to embrace technology, in part because of the difficulties involved in blending AI capabilities with art historical expertise.

Psychological Elements Lost in Automated Analysis: What AI Cannot See

The psychological dimensions that AI systems consistently fail to capture are precisely those that provide the deepest insights into human behavior, cultural meaning, and creative expression. These elements include emotional context, cultural symbolism, psychological motivation, and the subtle interpersonal dynamics that influence creative output.

Emotional context is arguably the biggest unsolved riddle in AI studies today. Although AI systems can detect emotional expressions via facial recognition or determine emotional content through text analysis, they cannot comprehend the psychological states that form the basis of emotional expressions or interpret the cultural differences on which expressions of emotion depend. Research shows that technology acceptance depends heavily on perceived usefulness and ease of use, but also on emotional and psychological factors that AI systems struggle to assess (Davis, 1989).

Cultural symbolism poses another, more philosophical, challenge for AI. Symbols are imbued with meaning that springs from cultural heritage, social situation, and shared memory, none of which can be extracted from statistical analysis of visual forms. Studies of human preferences for human-created versus AI-created art reveal that “people value art through multiple, co-occurring processes, with creator labels influencing people’s evaluations of art” regardless of the actual quality differences (Bellaiche et al., 2023).

Motivation, the deep drives, conflicts, and desires that colour a person’s sense of life, is still far beyond today’s AI. Knowing why a painter chose one palette, composition, or subject over another takes a kind of understanding of human nature that stems from empathy and psychology rather than mere pattern matching. This limitation is especially critical in psychological profiling, where understanding motivation is essential to producing accurate profiles.

The social dynamics behind creative work are another dimension that AI analysis cannot see. Artists’ practices are formed within relationships and social and cultural contexts that inform their production and extend beyond what any individual work can reveal. Humans can detect these influences by drawing on historical context, biography, or cultural experience, while for AI systems the work is merely a set of visual shapes.

The “AI 1.5” Model: Weighted Human Supervision in Practice

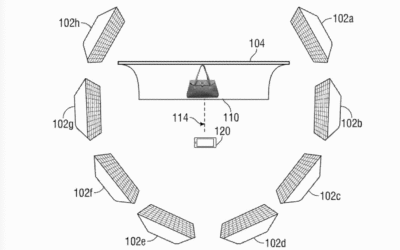

Quantum Space’s “AI 1.5” approach represents a practical implementation of hybrid intelligence principles specifically designed to address the psychological blind spots in automated analysis. Rather than treating human expertise and AI capabilities as competing approaches, this model creates a weighted system where human psychological insight guides AI processing to extract information that purely automated systems would miss.

This architecture is informed by the underlying strengths and weaknesses of human and computer intelligence. The computational tasks that go beyond human ability are delegated to AI components: crunching millions of data points, recognizing subtle visual patterns, and ensuring consistency across large datasets. Human participants contribute psychological interpretation, cultural context, and the sort of fine-grained understanding that comes from being human and having relevant professional expertise.

The “weighted” part of this method comes from systematically incorporating human judgment into AI processing. Instead of just having humans check AI findings, the system uses human judgment at various stages of analysis. Psychologists and domain experts contribute to the training of AI models and the interpretation of data, and serve as a point of reference for how AI systems interpret and organize knowledge.
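One way to picture this kind of weighting is a simple per-stage blend of an AI score and an expert score. The sketch below is purely illustrative: the `StageJudgment` type, the 0-to-1 score scale, and the `human_weight` parameter are our own assumptions, not details of the AI 1.5 system.

```python
from dataclasses import dataclass


@dataclass
class StageJudgment:
    """One analysis stage with an AI score and an expert score, both in [0, 1]."""
    stage: str
    ai_score: float
    human_score: float
    human_weight: float  # hypothetical: how much the expert counts at this stage


def blend(judgment: StageJudgment) -> float:
    """Weighted average of the expert and AI scores for one stage."""
    if not 0.0 <= judgment.human_weight <= 1.0:
        raise ValueError("human_weight must be between 0 and 1")
    w = judgment.human_weight
    return w * judgment.human_score + (1.0 - w) * judgment.ai_score


def blend_all(judgments: list[StageJudgment]) -> dict[str, float]:
    """Blend every stage, so human input enters at each stage, not only at the end."""
    return {j.stage: blend(j) for j in judgments}
```

A stage such as interpretation might be given a high `human_weight`, while a stage such as raw pattern extraction might be given a low one; how such weights would be chosen in practice is left open here.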

This method has proved especially beneficial for art analysis, in which psychological interpretation is important for the understanding of creative works. Traditional AI approaches can tell you that an artist used a lot of blue during a specific time period, but they can’t explain why. Was it the artist’s state of mind, a response to the surrounding culture, or aesthetic experimentation? The AI 1.5 model combines AI’s ability to identify color patterns with human expertise in psychological interpretation to provide insights that neither approach could achieve independently.

Training AI 1.5 systems differs fundamentally from traditional machine learning. Rather than feeding AI systems datasets and hoping they discern relevant patterns, human experts offer psychological frameworks that AI systems can learn from. This structured supervision helps AI learn patterns aligned with human interpretation rather than purely statistical regularities.

Case Study Applications: Art Analysis and Psychological Profiling

The practical application of hybrid intelligence principles in art analysis demonstrates both the potential and the complexity of human-AI collaboration. Quantum Space’s work in this domain illustrates how psychological insight can transform technological analysis from simple pattern recognition into sophisticated interpretation of creative expression.

Traditional art analysis methods fall into two broad categories, expert-based and computational, both with severe limitations. Experts have a wealth of psychological intuition and cultural understanding, but their analysis becomes inconsistent at large scale, and they are not immune to bias. Computers can crunch huge amounts of visual data and spot subtle patterns, but they are unable to read psychological meaning or cultural significance.

The hybrid approach integrates these capabilities systematically. AI reads the visual parameters of artworks (color distributions, stroke patterns, compositional structures) with high precision and consistency. Human experts then give these patterns meaning through psychological interpretation. The result is analysis that spans both the objective technical and the subjective psychological poles around which the cultural phenomenon we call art is structured.

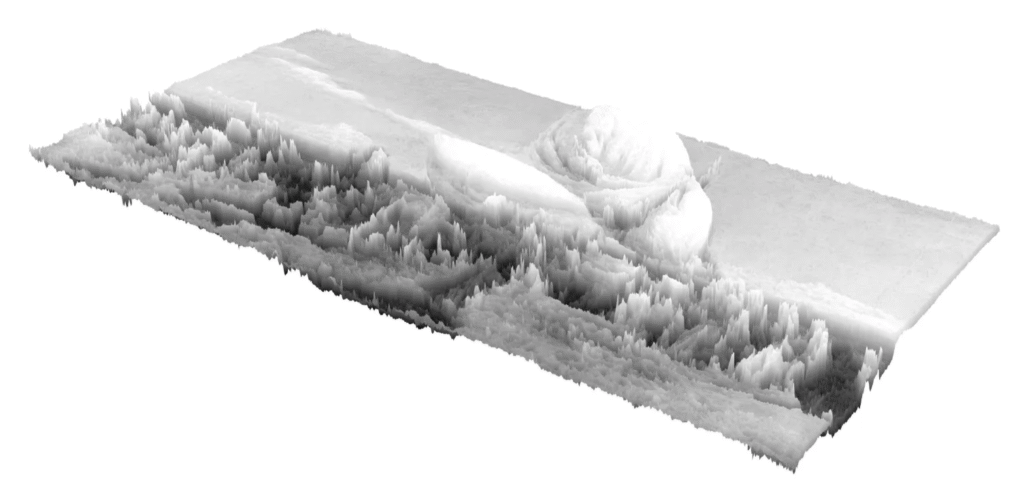

Psychological profiling through artwork analysis represents a particularly sophisticated application of this hybrid approach. The process begins with AI systems extracting detailed technical data from artworks: color choices, brushstroke characteristics, compositional patterns, and stylistic elements. This data provides objective information about an artist’s technical choices and creative patterns across multiple works.
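The extraction step for color can be sketched in a few lines. The example below is a minimal illustration, not the pipeline used in the case study: the function name `hue_distribution`, the six named hue bins, and the saturation cutoff are all assumptions, and pixels are taken as plain RGB tuples rather than read from an image file.

```python
import colorsys
from collections import Counter

# Six hue bins centred on the primary and secondary colours (an assumption).
HUE_NAMES = ["red", "yellow", "green", "cyan", "blue", "magenta"]


def hue_distribution(pixels, min_saturation=0.1):
    """Return the share of saturated pixels falling in each named hue bin.

    `pixels` is an iterable of (r, g, b) tuples with channels in 0..255.
    Near-grey pixels (saturation below `min_saturation`) are ignored
    because their hue is not meaningful.
    """
    counts = Counter()
    for r, g, b in pixels:
        h, s, _v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
        if s < min_saturation:
            continue
        # Shift by half a bin so each bin is centred on its named hue.
        index = int(h * len(HUE_NAMES) + 0.5) % len(HUE_NAMES)
        counts[HUE_NAMES[index]] += 1
    total = sum(counts.values())
    if total == 0:
        return {name: 0.0 for name in HUE_NAMES}
    return {name: counts[name] / total for name in HUE_NAMES}
```

Run across an artist’s works by period, a summary like this yields the kind of objective record (for example, a rising share of blue) that a human expert would then interpret.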

Psychological professionals read this data against academic psychology and cultural knowledge. The use of colour may reveal emotional or psychological aspects of an individual’s character or the influence of a particular culture. Brushstroke patterns could reflect personality, working method, and psychological state. Compositional details might signal psychological preoccupations, cultural background, and personal relationships.

By combining AI precision with human psychological understanding, we can obtain insights that are out of reach when either methodology is used alone. Recent work has managed to identify psychological patterns in artists’ production and relate them to biography, cultural movements, and historical events. This approach has been particularly fruitful in the case of artists whose life histories are scant, and where the analysis of work offers the central resource for psychological inference.

Addressing Statistical Errors Through Human-Guided AI Training

The benefits of hybrid intelligence systems include better handling of subjective material and the capability to fix statistical errors that are not easily identified in fully automated analysis. AI systems are prone to overfitting, where they identify spurious correlations in training data that do not reflect meaningful patterns. Human guidance during the training process can prevent these errors while directing AI attention toward psychologically meaningful patterns.

Many AI analysis errors can be attributed to confusing correlation with causation. AI systems excel at identifying correlations but cannot understand causal relationships without human guidance. In art analysis, an AI system might identify that certain color combinations appear frequently in an artist’s work during specific periods, but it cannot determine whether these patterns reflect psychological states, material constraints, or cultural influences without human interpretation.

Human-guided training addresses these limitations by incorporating psychological theory and domain expertise into AI learning processes. Rather than letting AI systems surface any statistical pattern in the data, human experts supply a theoretical framework that steers pattern identification toward psychologically significant relationships. This method reduces the false-positive rate and gives AI systems a better chance of finding patterns that are actually meaningful.
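A toy version of this screening step might look as follows. It is a sketch under stated assumptions: the `plausible` set is a hypothetical stand-in for an expert-supplied theoretical framework, the 0.8 threshold is arbitrary, and the feature names in the usage note are invented for illustration.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0  # a constant series carries no correlation signal
    return cov / sqrt(var_x * var_y)


def screen_patterns(features, target, plausible, threshold=0.8):
    """Split strongly correlated features into expert-endorsed and flagged.

    `plausible` is the set of feature names a human expert considers
    theoretically meaningful. Strong correlations outside that set are
    not discarded, only flagged for human review as possibly spurious.
    """
    endorsed, flagged = [], []
    for name, values in features.items():
        if abs(pearson(values, target)) >= threshold:
            (endorsed if name in plausible else flagged).append(name)
    return endorsed, flagged
```

For instance, if both a work’s share of blue and its catalogue number correlate strongly with an annotated mood score, only the former would be endorsed when the expert lists `blue_share` as plausible; the catalogue number would be flagged as a likely artifact of ordering rather than a psychological signal.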

Training is an iterative process between human experts and AI systems. Psychologists and subject matter experts audit AI results to confirm valid insights and correct errors or misdirections. These inputs are incorporated over subsequent training rounds, incrementally improving AI performance on tasks with psychological complexity. Research on technology acceptance shows that this guided approach can improve user satisfaction and system effectiveness compared to purely automated systems (Davis, 1989).
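The audit-and-correct cycle can be outlined as a simple loop. This is a schematic sketch only: `model_predict`, `expert_review`, and `update` are hypothetical callables standing in for a real model, a human reviewer, and a retraining step, none of which are specified in the source.

```python
def review_round(predictions, expert_review):
    """Run one audit round: the expert confirms or corrects each AI output.

    `predictions` maps item id -> AI label; `expert_review(item, label)`
    returns the label the expert considers correct.
    Returns (corrections, share of outputs the expert left unchanged).
    """
    corrections = {}
    for item, label in predictions.items():
        verdict = expert_review(item, label)
        if verdict != label:
            corrections[item] = verdict
    if not predictions:
        return corrections, 1.0
    agreement = (len(predictions) - len(corrections)) / len(predictions)
    return corrections, agreement


def train_iteratively(model_predict, items, expert_review, update, max_rounds=5):
    """Repeat audit and update until the expert has nothing left to correct."""
    history = []
    for _ in range(max_rounds):
        predictions = {item: model_predict(item) for item in items}
        corrections, agreement = review_round(predictions, expert_review)
        history.append(agreement)
        if not corrections:
            break
        update(corrections)  # fold expert corrections into the model
    return history
```

The returned `history` records expert agreement per round, which gives a rough way to see whether successive rounds are actually improving the system.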

Custom training for specialized tasks is another advantage of the hybrid approach. Generic AI models are trained on general datasets that do not reflect the exact needs of particular application domains. Hybrid systems can instead be trained as specialists for specific purposes, for example art historical analysis, psychological assessment, or cultural interpretation, with human experts providing domain-specific guidance throughout the training process.

Socio-Psychological Implications of Human-AI Collaboration

The wider socio-psychological implications of human-AI interaction go beyond the technical to the very nature of intelligence, expertise, and the relationship between human and machine. The development of hybrid intelligence systems reflects and shapes evolving cultural attitudes toward technology, human capabilities, and the distribution of cognitive labor in society.

From a social psychology perspective, hybrid intelligence systems challenge traditional boundaries between human and artificial intelligence while preserving distinctly human capabilities. Such an approach offers an alternative to the human/machine dichotomy, one in which humans and machines are not placed in opposition but are viewed as two facets of complex cognitive systems. This framing has significant implications for how society perceives technological change and fears of human displacement.

Literature on technology acceptance suggests that partially automated solutions might meet less resistance than purely automated systems because they preserve meaningful roles for human expertise. Studies show that perceived usefulness is the strongest predictor of attitudes toward AI usage, but users also value systems that enhance rather than replace human capabilities (Schade et al., 2024). This tendency is particularly evident in areas involving psychological insight, where practitioners are aware that purely automated analysis has its shortcomings.

The cognitive science ramifications of hybrid intelligence systems are no less compelling. By delegating most (but not all) computational work to AI while leaving some roles for human interpretation and judgment, such systems may supplement, not replace, human cognitive processes. Kahneman’s research on dual-system thinking suggests that humans can make better decisions when they can rely on external support for System 2 processing while maintaining their System 1 intuitive abilities (Kahneman, 2011).

Yet hybrid systems also raise key issues of skill erosion and expertise retention. As AI systems take on more and more complex analysis, how do humans develop and maintain the expertise required for effective collaboration? Studies indicate that successful hybrid systems are those that deliberately retain and advance human capabilities in the areas where human insight remains important.

The cultural stakes of hybrid intelligence raise questions about creativity, interpretation, and meaning-making. In areas like art analysis, combining AI with human intuition prompts us to reconsider how aesthetics and culture are interpreted. While hybrid systems can identify patterns and provide objective analysis, the meaning and significance of artistic expression ultimately depend on human cultural understanding and emotional response.

Future Applications: Expanding Hybrid Intelligence Across Sectors

The principles illustrated in art analysis applications are widely applicable to any realm that combines computational strength and psychological insight. Healthcare, education, financial services and social services are all opportunities for hybrid intelligence systems that meld the power of AI with human skill in psychological and cultural interpretation.

Healthcare appears to be among the most promising applications of hybrid intelligence because of the significance of psychological factors in medical diagnosis and treatment. Artificial intelligence systems do extraordinarily well at processing medical imaging data and finding patterns associated with pathology, but they cannot account for patient psychology, the influence of culture on health behavior, or the social context of illness. Research shows that human-computer interaction in healthcare benefits significantly from systems that preserve roles for human expertise while leveraging AI capabilities (Olson and Olson, 2003).

Education is another likely field for hybrid intelligence. AI can help create learning content personalized to individual learners and monitor student performance in unprecedented detail, but it cannot understand the emotional or psychological factors that affect learning, nor adapt to cultural differences among learners. Research in educational psychology suggests that “human and artificial intelligence collaboration for socially shared regulation in learning” could significantly improve educational outcomes (Atchley et al., 2024).

AI plays a growing role in the financial services industry for market analysis and risk management. However, purely automated systems cannot comprehend the psychological and cultural factors that drive market behaviour. Hybrid systems that combine AI data processing with human insight into market psychology may generate more accurate analyses while mitigating algorithmic errors that ignore human behavior.

Social services may offer the most difficult challenges for hybrid intelligence application. AI can crunch huge volumes of social data and discern patterns beyond what humans can analyze, but it can’t grasp the psychological and cultural nuances involved in social issues. Hybrid systems using AI pattern recognition combined with human expertise in social psychology and cultural competence could enhance the effectiveness of social interventions.

The development of hybrid intelligence across these sectors will require careful attention to the specific psychological and cultural requirements of each domain. Generic AI systems are insufficient for applications requiring psychological insight, and successful hybrid systems must be designed with deep understanding of both technological capabilities and human expertise requirements.

Conclusion: Redefining the Role of Human Expertise in the AI Era

Hybrid intelligence systems are the outcome of a radically different conception of the relationship between human and artificial intelligence. Rather than viewing technological advancement as a zero-sum competition between human and machine capabilities, the hybrid approach recognizes that the most sophisticated analytical tasks require integration of computational power with human psychological insight.

The evidence presented throughout this analysis demonstrates that current AI systems, despite remarkable capabilities in pattern recognition and data processing, consistently fail to capture the psychological and cultural dimensions that provide meaning and context to complex analytical tasks. These limitations are not merely technical problems to be solved through better algorithms, but fundamental differences between computational processing and human understanding that emerge from lived experience and social interaction.

Quantum Space’s AI 1.5 process illustrates how these constraints can be overcome by the disciplined fusing of human psychological science with AI computational power. By considering human insight and machine learning as complementary instead of competing methods, the hybrid system can produce analytic results that are not available to either humans or machines alone.

The socio-psychological consequences of this change go beyond technical aspects and raise questions concerning human skill in an increasingly automated world. Instead of rendering human understanding obsolete, capable AI systems underscore the value of humans in interpreting, understanding, and making sense of the psychological, social, and cultural significance of content. The best versions of AI will be those that amplify, rather than replace, what is uniquely human about us.

The future of data analysis in psychologically complex domains lies not in choosing between human expertise and artificial intelligence, but in creating sophisticated partnerships that leverage the strengths of both approaches. This hybrid methodology offers a blueprint for technological development that preserves and amplifies human capabilities while addressing the limitations of purely automated systems.

As AI systems become increasingly sophisticated, the distinctly human capabilities of psychological insight, cultural understanding, and empathetic interpretation become more rather than less valuable. The challenge for future development will be creating systems that integrate these capabilities effectively while ensuring that technological advancement enhances rather than diminishes human potential for understanding and meaning-making.