USPTO Patent US-20250272995-A1

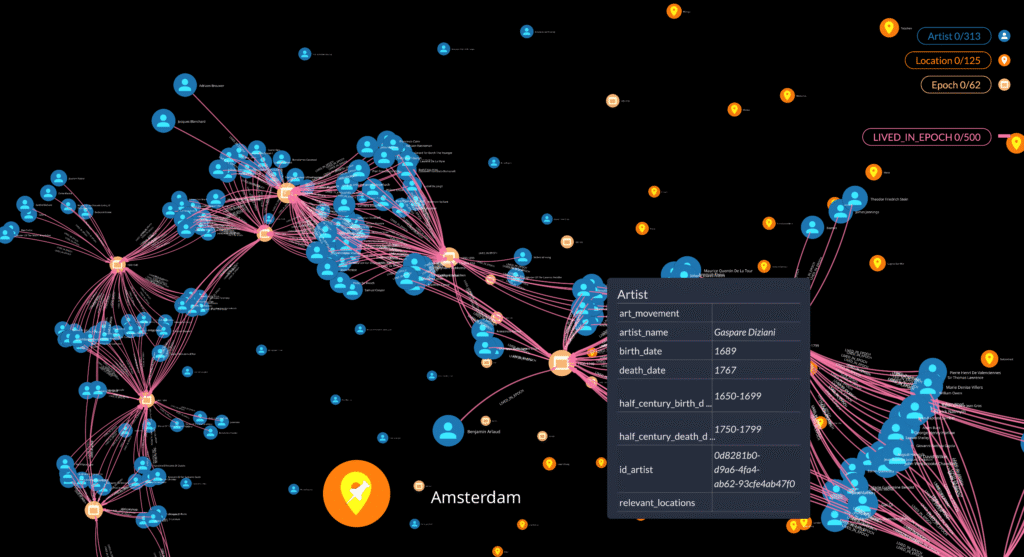

SYSTEMS, METHODS AND TECHNIQUES FOR ASCERTAINING OBJECT PROVENANCE AND/OR STATUS

Abstract

Systems, devices, and methods are disclosed for determining provenance (e.g., origin, authenticity) for an object using digital image data of one or more objects. A system receives, via a network, first digital image data of an object. The system determines, from the first digital image data, a first set of feature variables, each corresponding to a characteristic of the object. The system determines, via an artificial intelligence model and with input including the first set of feature variables and a comparison dataset, an origin for the object. The artificial intelligence model generates, based on the portion of the first set of feature variables that matches corresponding portions of the comparison dataset, an output indicative of the origin. The system communicates, via the network, an indication of the provenance of the object.
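
The comparison step summarized in the abstract can be illustrated with a deliberately simple stand-in. The disclosure uses an artificial intelligence model; the Python sketch below instead scores each entry of a hypothetical comparison dataset by the fraction of shared feature variables that agree within a relative tolerance, mirroring only the idea that the output depends on the portion of feature variables that match. The `Reference` type, the feature names, the 5% tolerance, and the sample values are assumptions for illustration.

```python
from dataclasses import dataclass

@dataclass
class Reference:
    """One hypothetical comparison-dataset entry: a known origin and its feature variables."""
    origin: str
    features: dict[str, float]

def match_fraction(query: dict[str, float], ref: dict[str, float], tol: float) -> float:
    """Fraction of shared feature variables whose values agree within relative tolerance `tol`."""
    shared = [name for name in query if name in ref]
    if not shared:
        return 0.0
    matches = 0
    for name in shared:
        # Relative difference, guarded against division by zero for all-zero features.
        scale = max(abs(query[name]), abs(ref[name]), 1e-12)
        if abs(query[name] - ref[name]) / scale <= tol:
            matches += 1
    return matches / len(shared)

def best_origin(query: dict[str, float], dataset: list[Reference],
                tol: float = 0.05) -> tuple[str, float]:
    """Return (origin, score) for the reference entry with the highest match fraction."""
    scored = [(ref.origin, match_fraction(query, ref.features, tol)) for ref in dataset]
    return max(scored, key=lambda pair: pair[1])

# Hypothetical coin measurements (millimetres) for two candidate origins.
dataset = [
    Reference("mint_A", {"diameter_mm": 24.3, "rim_width_mm": 1.2, "relief_depth_mm": 0.30}),
    Reference("mint_B", {"diameter_mm": 24.0, "rim_width_mm": 1.5, "relief_depth_mm": 0.25}),
]
query = {"diameter_mm": 24.31, "rim_width_mm": 1.21, "relief_depth_mm": 0.29}
print(best_origin(query, dataset))  # prints ('mint_A', 1.0)
```

A learned model could weight features differently or cope with features missing from some references; this fraction treats every shared feature equally.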

Inventors: Federico Pignatelli della Leonessa (New York, NY), Francesco Rocchi (Ravenna, IT), Alberto Finadri (Castiglione Delle Stiviere, IT)

Applicant: Spacefarm LLC (New York, NY)

Family ID: 1000008490767

Assignee: Spacefarm LLC (New York, NY)

Appl. No.: 19/063075

Filed: February 25, 2025

Related U.S. Application Data

INTRODUCTION

[0003] Some of the most valuable assets in the world are collectibles and luxury items, such as coins, jewelry, gemstones, artwork, and other objects. Because of their value, these types of objects are often the subject of unauthorized reproductions, elaborate forgeries, or one or more, potentially high-quality, counterfeit copies. For example, retailers large and small are struggling to combat the growing problem of counterfeit goods. Michigan State University conducted a 17-country Global Anti-Counterfeiting Consumer Survey and reported in 2023 that 68% of consumers were deceived into buying counterfeits at least once in the past year. Alhabash, S., Kononova, A., Huddleston, P., Moldagaliyeva, M., & Lee, H. (2023). Global Anti-Counterfeiting Consumer Survey 2023: A 17 Country Study. East Lansing, MI: Center for Anti-Counterfeiting and Product Protection, Michigan State University. https://a-capp.msu.edu/article/global-anti-counterfeiting-consumer-survey-2023/. Accordingly, before a proposed sale of a valuable object, the authenticity or origin of that object is often verified (or evaluated). In some instances, the authenticity or origin of an object can be determined, for example, by an expert in object origins, history, and/or object authenticity. However, such experts may not exist for some types of objects. Further, even where expertise in the authenticity or origin of a given type of object is available, such experts are highly specialized and possess a skillset that requires many years of experience to develop. As a result, the cost of an expert analysis can be prohibitively high for a majority of objects on the market today, even though those objects possess substantial value and carry a corresponding risk that a buyer purchases a forgery or counterfeit. Many objects may have sufficient value to create a need to verify the authenticity of their purported origin, but that value may be insufficient to justify the cost of an expert analysis.
For example, for many objects with a collector value (e.g., coins, stamps, etc.) currently on the market, the cost of an expert analysis may exceed the entire value of the object. Nevertheless, objects worth less than the cost of a typical expert analysis may still be worth substantial amounts (e.g., several thousand dollars or even tens of thousands of dollars).

[0004] Expert analysis may be inadequate in some instances, or may otherwise fall short. Experts are human, and their work (or opinions) can be fallible. For example, as the potential value of an object increases, human factors may be more likely to come into play (or to create tension), such as external influence, internal or external motivations, prejudice, preference, or bias. And there is always a possibility that an expert is simply wrong. While skill and experience can help to mitigate the human factor, additional methodologies and inputs for verifying, validating, and/or authenticating objects are desirable.

[0005] Another situation where expert analysis may fall short is when information is lacking, or when time constraints or cost considerations prevent obtaining all requisite and/or available information. For example, records tracing the location and/or ownership of an object through the years may be unavailable. Lack of information can thwart even the most skilled and/or experienced expert.

[0006] Presently available technology to supplement, complement, or supplant expert analysis is prohibitively expensive. Sophisticated machines and processes can provide additional information as to authenticity, but at a cost that is generally justifiable only for the most expensive objects (e.g., greater than USD $250,000, or in some cases greater than USD $500,000).

[0007] Accordingly, there is a need for technology that can verify the provenance, origin, and/or authenticity of many objects more effectively and efficiently (e.g., at a cost lower than the average cost of an expert analysis (appraisal)) and/or with sufficient confidence for use in authenticating objects worth many thousands of dollars.

SUMMARY

[0008] The present disclosure provides at least a technical solution that is directed to determining a provenance and/or a present status of objects, such as determining authentication (or verification) information relating to an origin, source, and/or surface status of an object. The technical solution can be based on one or more images that depict the object (to be authenticated and/or whose surface status is to be assessed) and on one or more datasets with visual information based on image data for a plurality of separate objects.

Description

BRIEF DESCRIPTION OF THE FIGURES

[0009] These and other aspects and features of the present implementations are depicted by way of example in the figures discussed herein. Present implementations can be directed to, but are not limited to, examples depicted in the figures discussed herein. Thus, this disclosure is not limited to any figure or portion thereof depicted or referenced herein, or any aspect described herein with respect to any figures depicted or referenced herein.

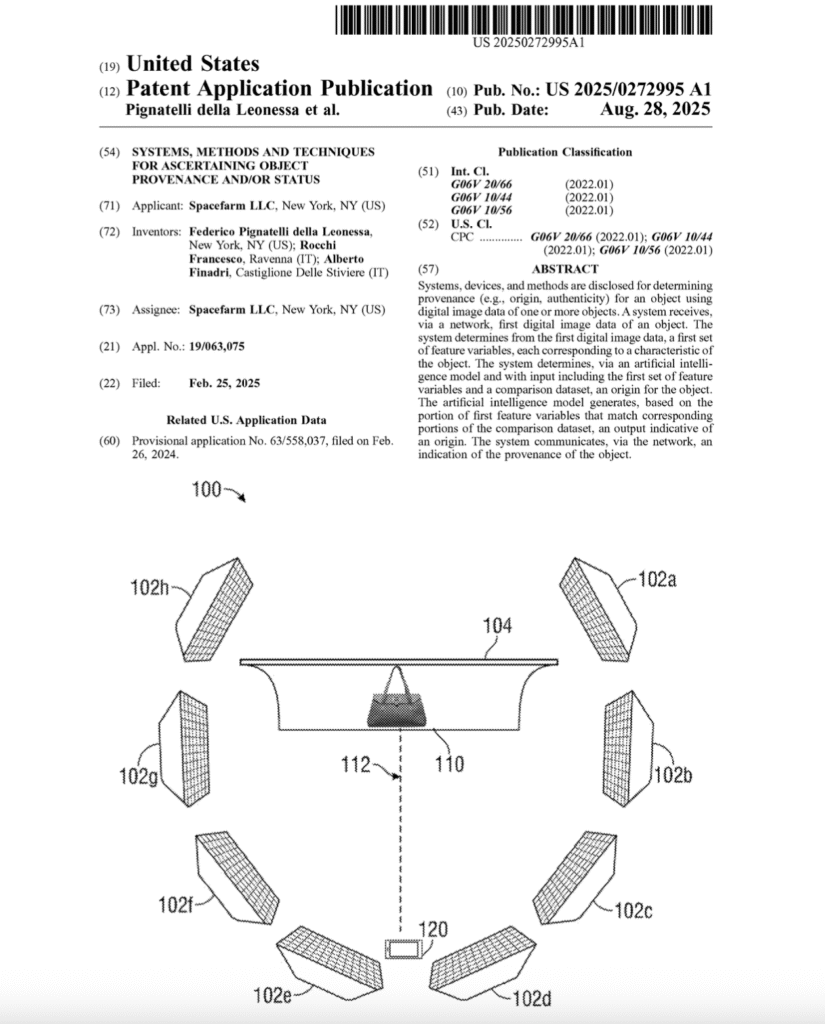

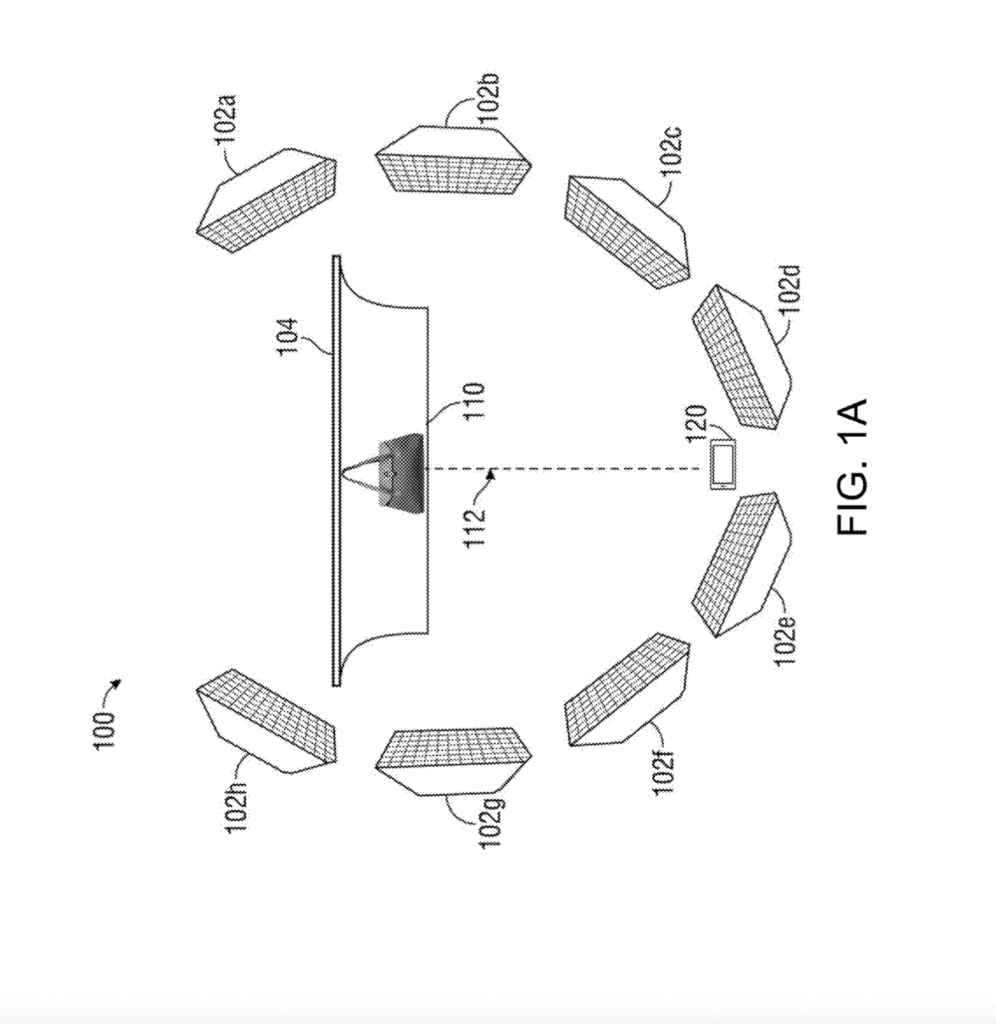

[0010] FIG. 1A is a diagram of a client device acquiring digital image data of an object at a first distance (e.g., for a data archive with comparison visual information), according to one embodiment of the present disclosure.

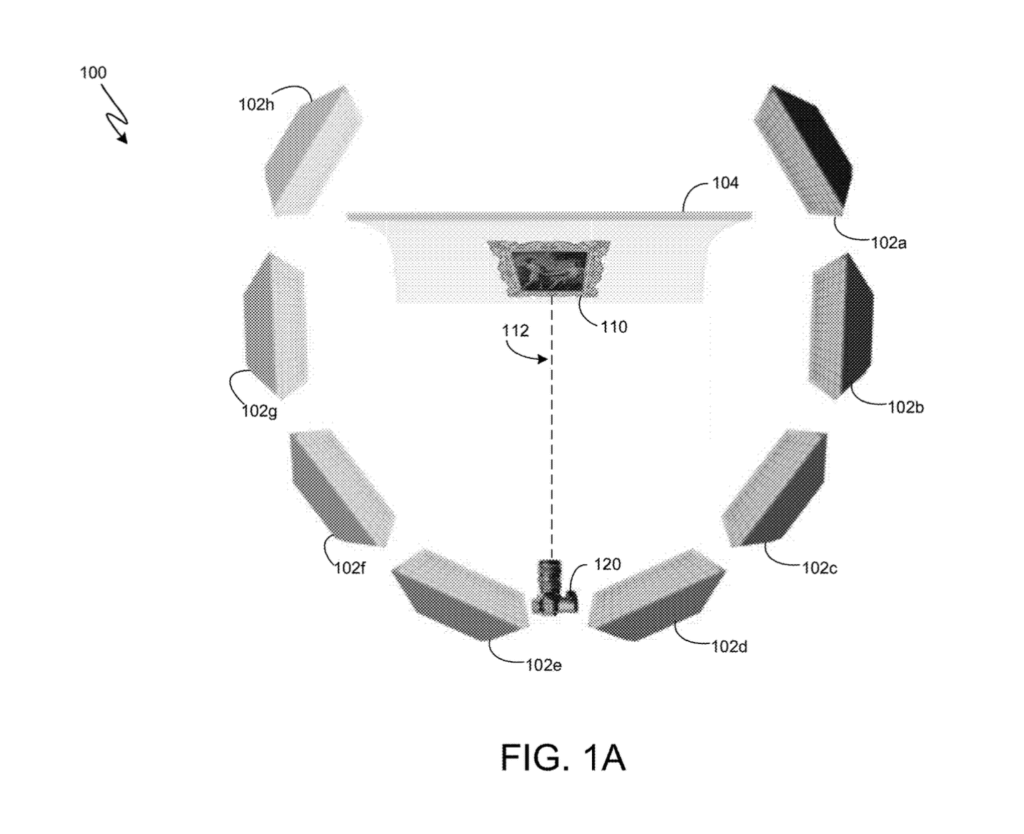

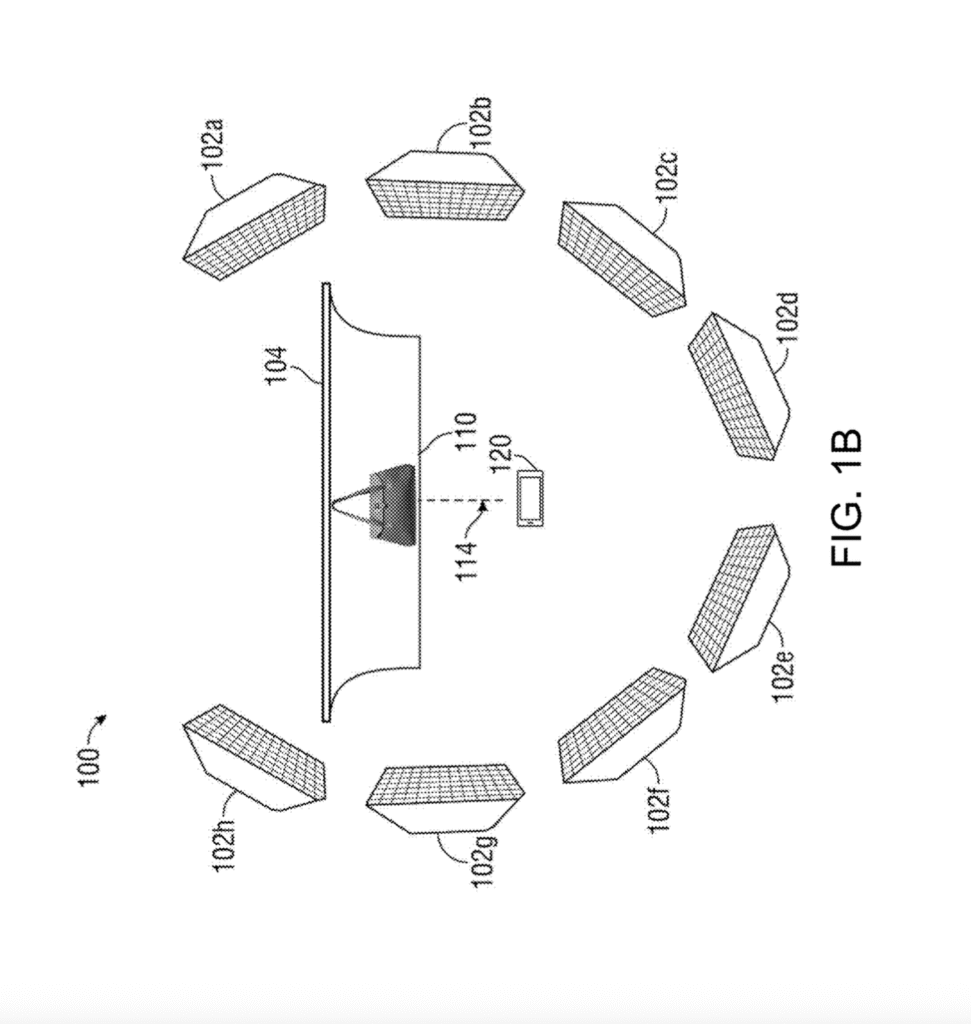

[0011] FIG. 1B is a diagram of a client device acquiring digital image data of an object at a second distance (e.g., for a data archive with comparison visual information), according to one embodiment of the present disclosure.

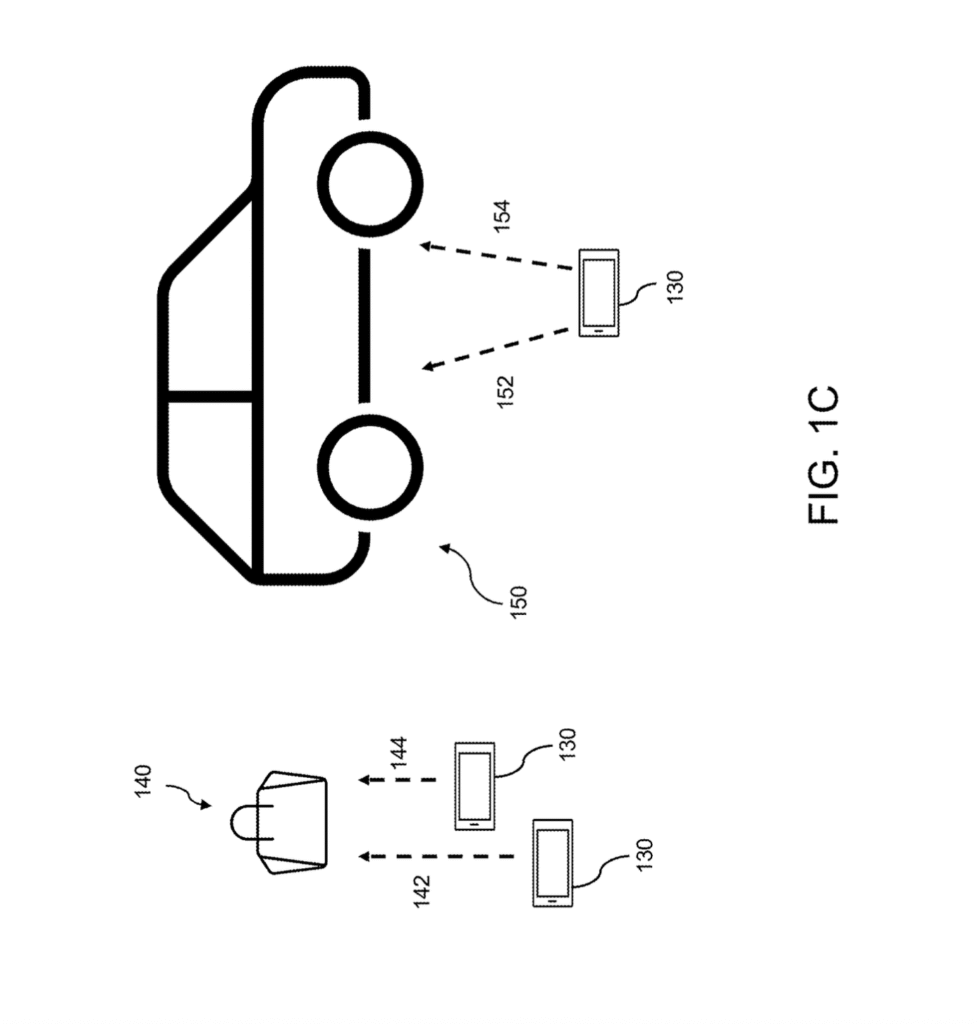

[0012] FIG. 1C is a diagram of a client device acquiring digital image data (e.g., by a user) of an object to be evaluated, according to one embodiment of the present disclosure.

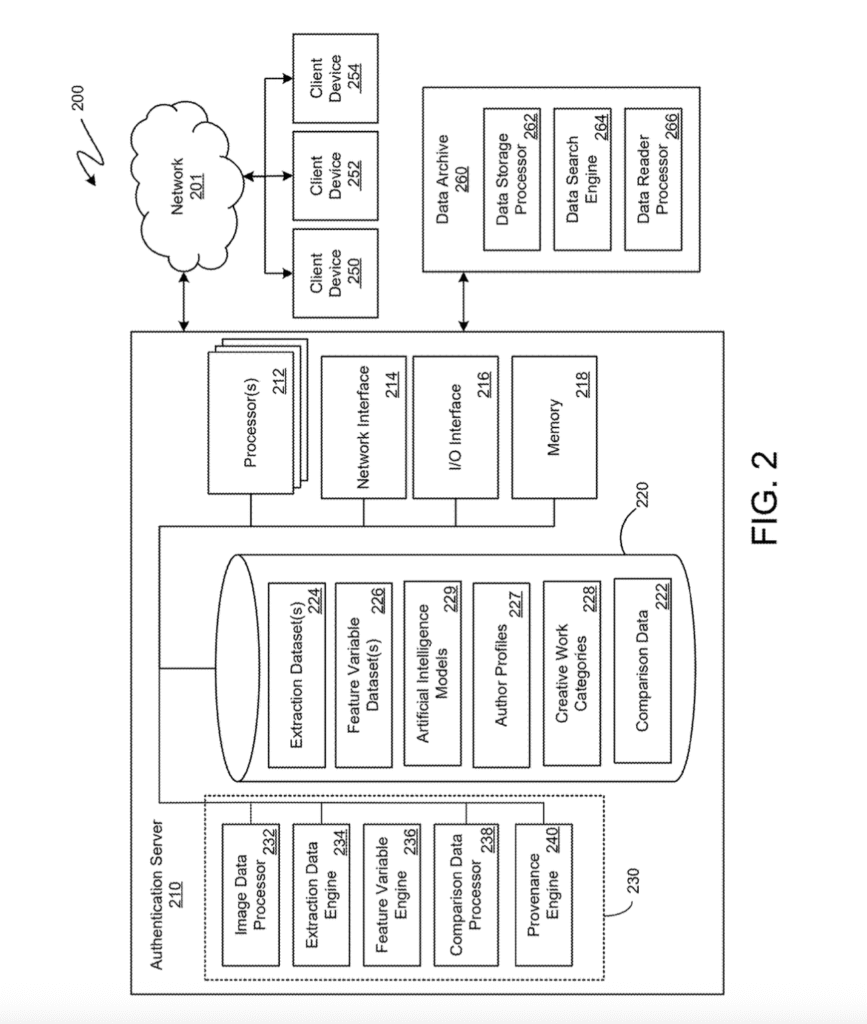

[0013] FIG. 2 is a block diagram of an object provenance system, according to one embodiment of the present disclosure.

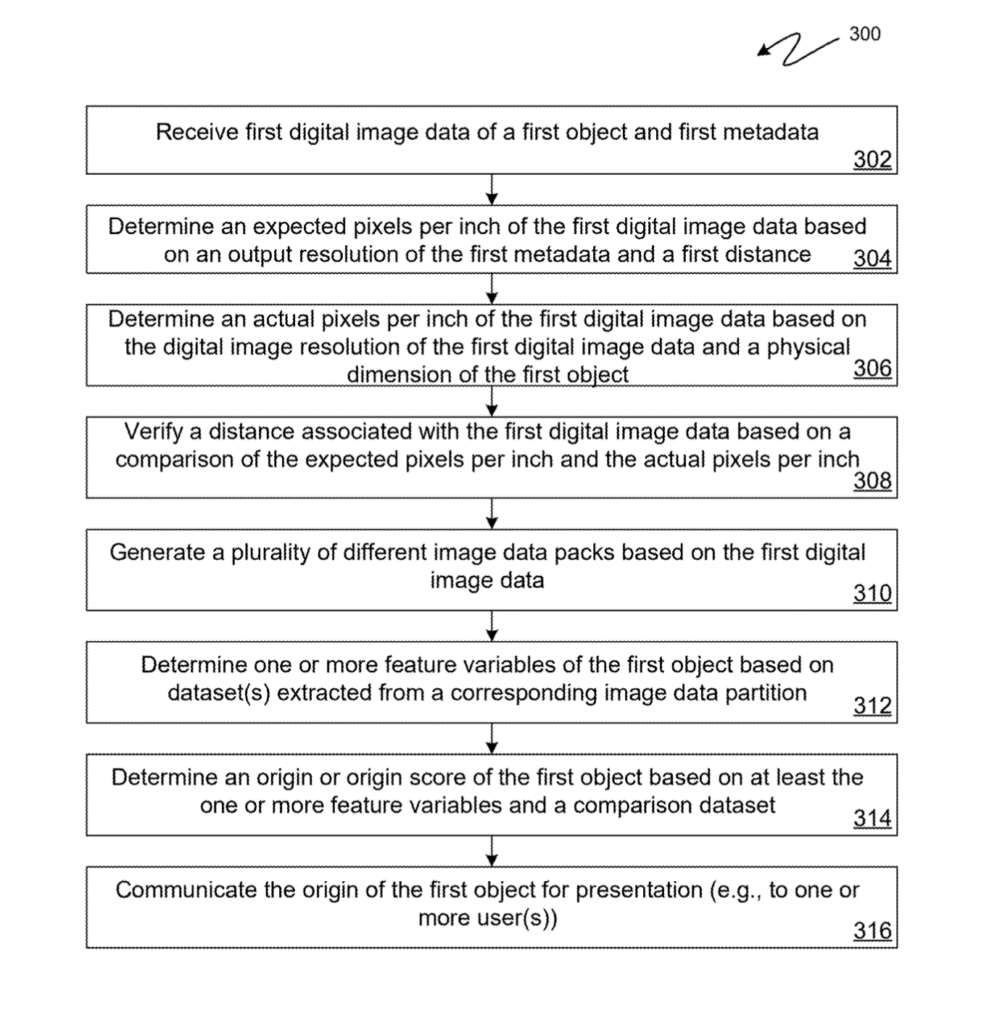

[0014] FIG. 3 is a flow diagram of a method of digital image data acquisition, according to one embodiment of the present disclosure.

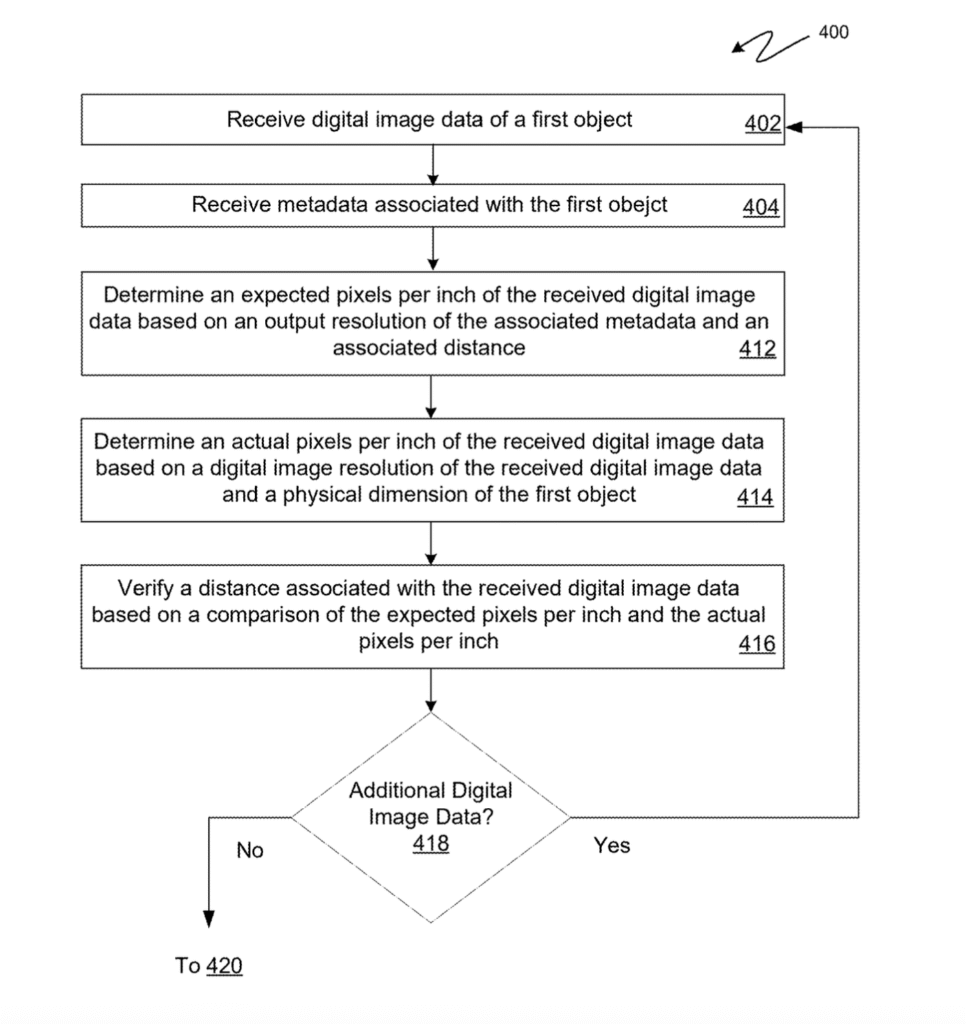

[0015] FIG. 4A is a flow diagram of a method to generate an object origin score, according to one embodiment of the present disclosure.

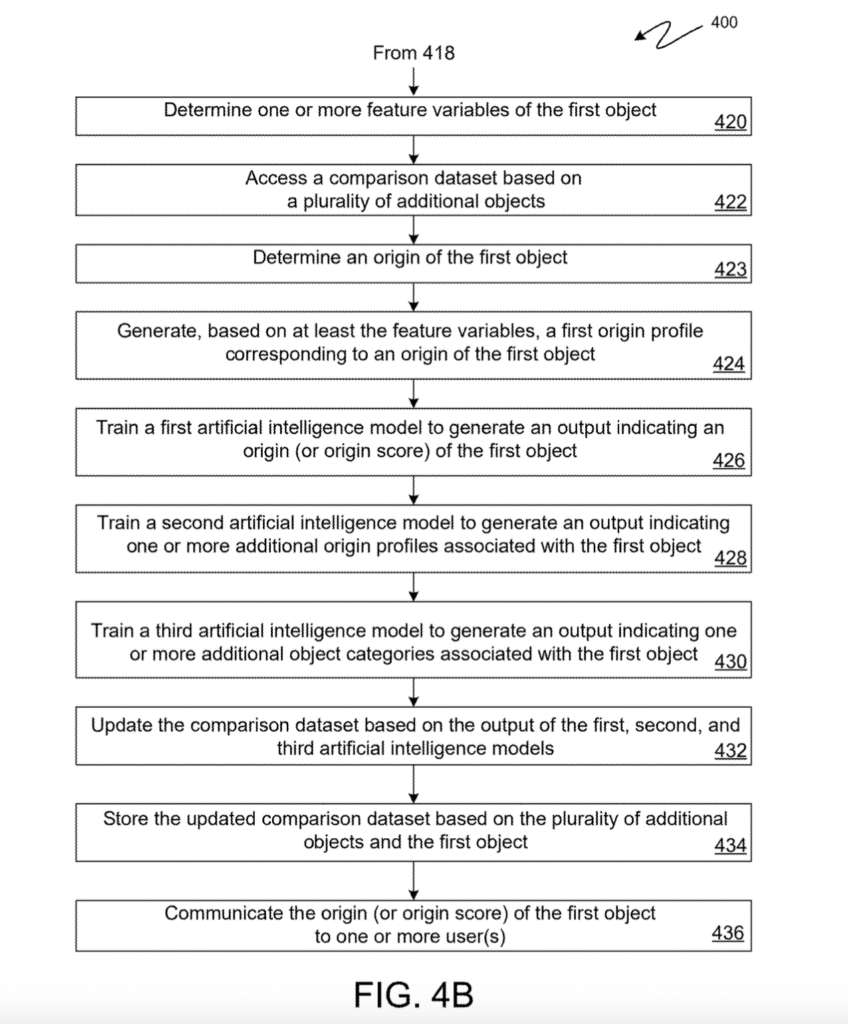

[0016] FIG. 4B is a flow diagram of a method to generate an object origin score, according to one embodiment of the present disclosure.

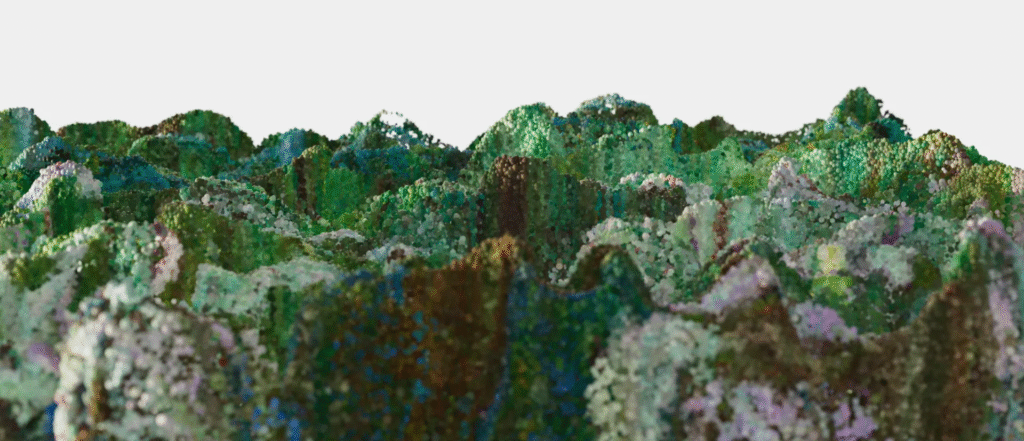

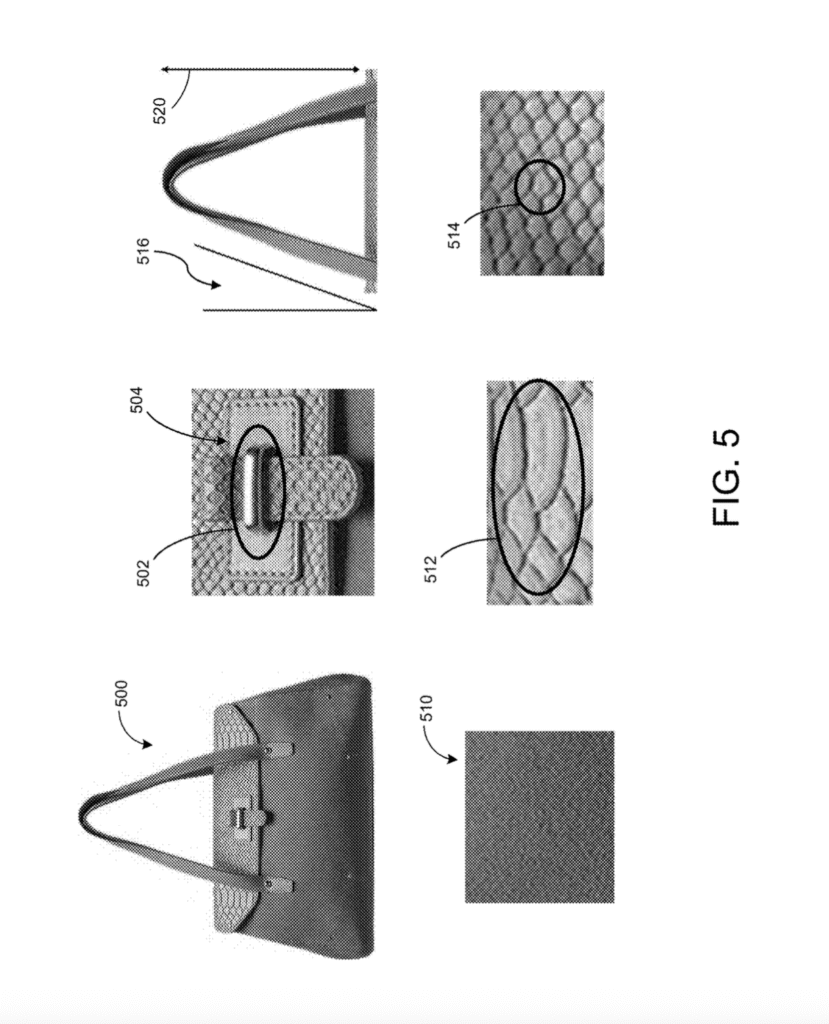

[0017] FIG. 5 depicts example object features captured in image data of the object, according to one embodiment of the present disclosure.

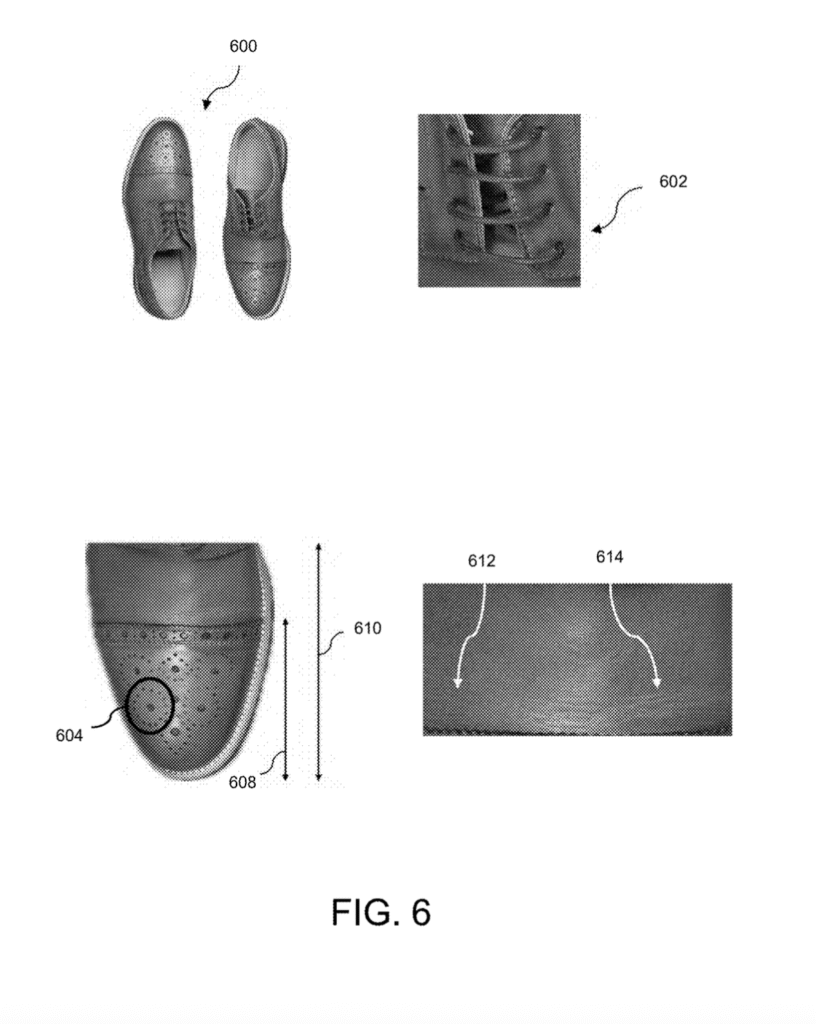

[0018] FIG. 6 depicts additional example object features captured in image data of the object, according to one embodiment of the present disclosure.

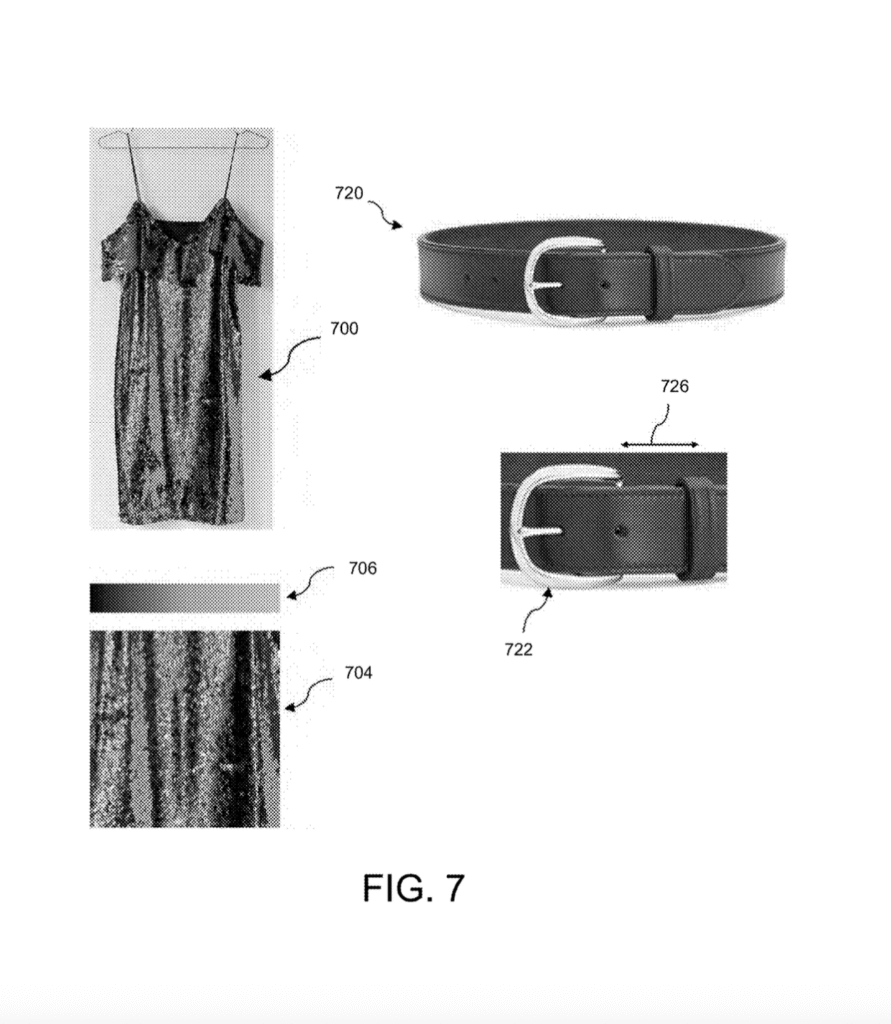

[0019] FIG. 7 depicts additional example object features captured in image data of the object, according to one embodiment of the present disclosure.

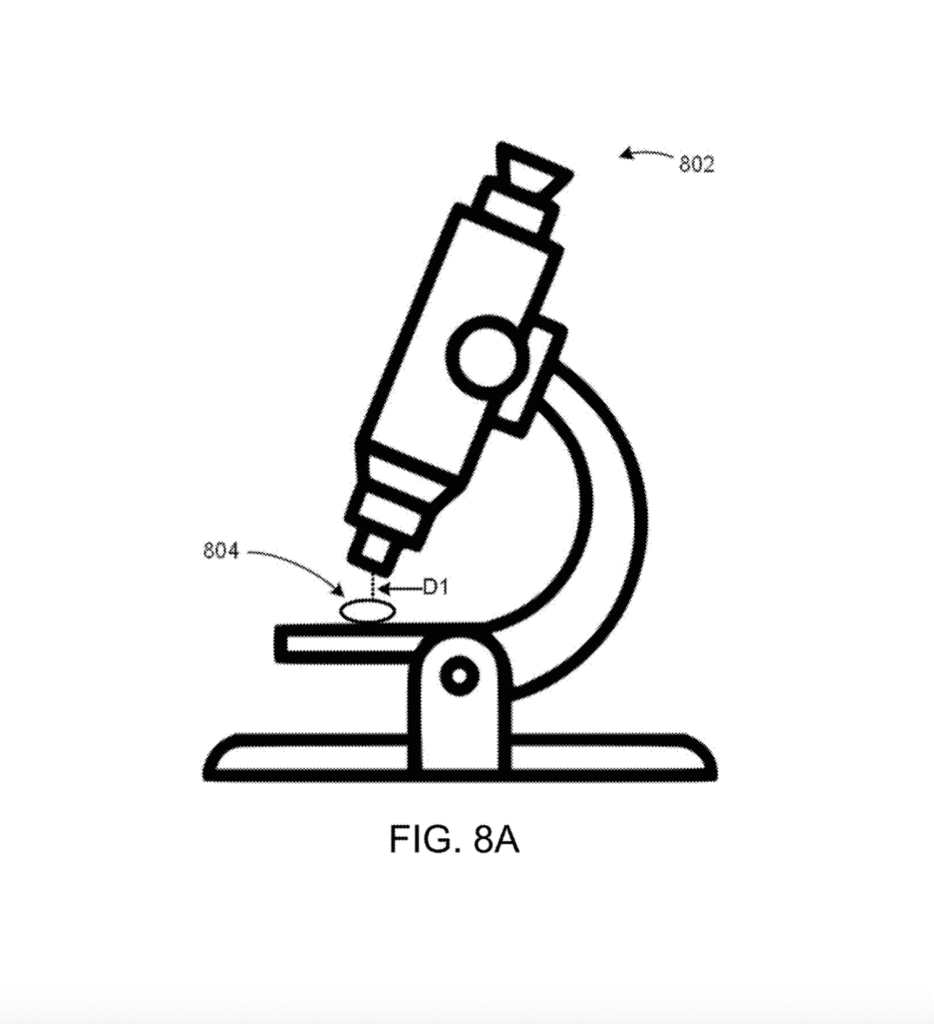

[0020] FIG. 8A is an example of acquiring digital image data of an object at a first distance, according to one embodiment of the present disclosure.

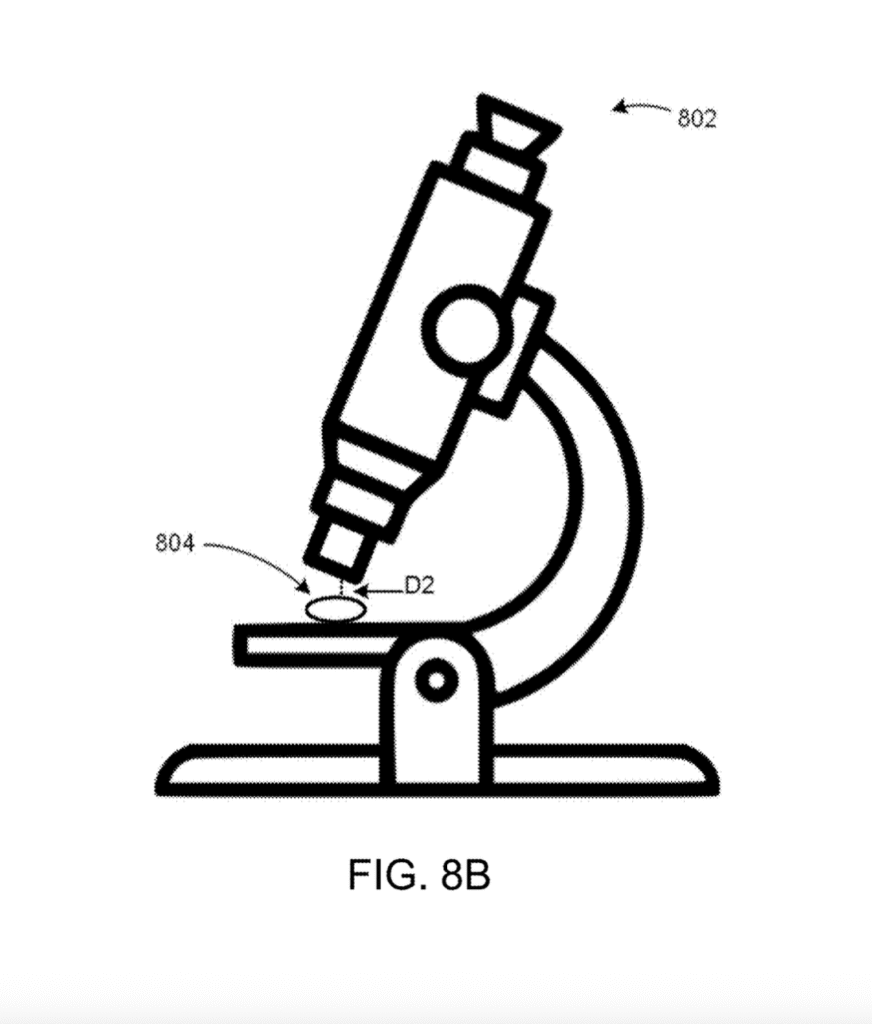

[0021] FIG. 8B is an example of acquiring digital image data of an object at a second distance, according to one embodiment of the present disclosure.

DETAILED DESCRIPTION

[0022] The present disclosure provides at least a technical solution directed to determining a provenance of objects (e.g., gemstones, coins, collector cards, stamps, paintings, drawings, sculptures, luxury goods (e.g., accessories, clothing items), automobiles, watches, artisanal works, semi-artisanal works, etc.). The disclosed embodiments may provide authentication (or verification) of the origin or source (e.g., associated origin(s) and/or source(s)) of an object. The technical solution can be based on at least an image dataset that depicts the object to be authenticated and a comparison dataset that is based on image data for a plurality of separate objects.

[0023] The present disclosure also provides at least a technical solution directed to determining a surface status of objects. In example embodiments, a surface status and conditioning report could indicate status changes and the condition of an object. Such embodiments could be used, for example, in applications such as car rentals or vault storage, where identifying differences, scratches, or other changes in an object’s surface is useful. Such functionality could also be used, for example, in insurance disputes and in the preservation of valuable items (such as vehicles).
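
The disclosure does not state how surface changes are detected. As one hedged illustration only, the sketch below applies plain pixel differencing (a swapped-in technique, not the disclosed method) between a stored baseline image and a newly captured image of the same surface, flagging pixels whose grayscale intensity changed by more than a threshold. It assumes the two images are already aligned, equally lit, and the same size; the threshold and sample values are hypothetical.

```python
def surface_change_mask(baseline: list[list[int]], current: list[list[int]],
                        threshold: int) -> list[list[bool]]:
    """Flag pixels whose grayscale intensity changed by more than `threshold`.

    Assumes both images are already registered (aligned), equally lit, and the same size.
    """
    if len(baseline) != len(current) or any(len(a) != len(b) for a, b in zip(baseline, current)):
        raise ValueError("images must be aligned and have the same dimensions")
    return [[abs(a - b) > threshold for a, b in zip(row_a, row_b)]
            for row_a, row_b in zip(baseline, current)]

def changed_fraction(mask: list[list[bool]]) -> float:
    """Share of pixels flagged as changed (True counts as 1 when summed)."""
    total = sum(len(row) for row in mask)
    return sum(sum(row) for row in mask) / total if total else 0.0

# A 2x3 patch: one pixel shifts slightly (noise), one darkens sharply (e.g., a new scratch).
baseline = [[100, 100, 100], [100, 100, 100]]
current = [[100, 102, 100], [100, 40, 100]]
mask = surface_change_mask(baseline, current, threshold=25)
```

In this example only the sharply darkened pixel is flagged. A practical system would also need to align the images and compensate for lighting differences before differencing, since either would otherwise register as a surface change.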

[0024] Various embodiments of the disclosed approach may involve: data acquisition and digitization of high-quality images of an object, data extraction, and artificial intelligence (AI) training (see, e.g., image capture depicted in FIGS. 1A and 1B); data storage and archiving of the high-quality images following strict protocols; acquisition of new data from users and uploading of the new data (see, e.g., image capture depicted in FIG. 1C); and comparison of the user-submitted data with data stored in one or more archived datasets to verify the authenticity and/or status of the object.

[0025] Aspects of this technical solution are described herein with reference to the figures, which are illustrative examples of this technical solution. The figures and examples below are not meant to limit the scope of this technical solution to the present implementations or to a single implementation, and other implementations in accordance with present implementations are possible, for example, by way of interchange of some or all of the described or illustrated elements. Where certain elements of the present implementations can be partially or fully implemented using known components, only those portions of such known components that are necessary for an understanding of the present implementations are described, and detailed descriptions of other portions of such known components are omitted to not obscure the present implementations. Terms in the specification and claims are to be ascribed no uncommon or special meaning unless explicitly set forth herein. Further, this technical solution and the present implementations encompass present and future known equivalents to the known components referred to herein by way of description, illustration, or example.

[0026] FIG. 1A is a diagram of an image data acquisition environment 100, according to one embodiment of the present disclosure, including a client device 120 configured to collect, at a first distance 112, digital image data of an object 110. As used herein, digital image data may be based on images, video, or a combination of images and video. The client device 120 is configured to capture a digital image dataset (e.g., a plurality of digital images and associated metadata) of the object 110 from the first distance 112. The digital image dataset (e.g., digital image data comprising first digital image data, second digital image data, third digital image data, fourth digital image data, etc., and the respective associated metadata) can be used to authenticate the object 110 or to generate a provenance score, an authenticity score, or an origin score for the object 110. In an aspect, the digital image dataset can be used to determine an origin of the object 110.

[0027] In some embodiments, the client device 120 comprises a digital imaging device (e.g., a CMOS or CCD image sensor of a digital imaging device, including the digital camera of a smartphone or other mobile device, a full frame digital camera (e.g., a Digital Single-Lens Reflex (“DSLR”) camera), and the like) capable of acquiring digital image data that adheres to one or more minimum specifications of acceptable digital image data. Stated differently, the client device 120 may adhere to one or more minimum specifications of an acceptable digital imaging device (e.g., minimum specifications of the client device 120).

[0028] For example, the client device 120 can, in some embodiments, include any full frame digital camera, or digital imaging device, configured to output digital image data in a raw image file format with an image resolution of at least 30 megapixels (e.g., a minimum of 30 megapixels, a minimum number of pixels-per-inch of the digital images output by the client device, a minimum size of the pixels of the client device’s image sensor, etc.), but is not limited thereto (e.g., the client device 120 can include digital imaging devices with less than 30 megapixel imaging resolution, such as 15 megapixel image resolution). Alternatively, in some embodiments, the client device 120 can include a full frame digital camera and one or more computer(s) coupled to the digital camera to receive the digital image data that it collects. Thus, the client device 120 need not be limited to a digital camera (e.g., the camera shown in FIGS. 1A and 1B) and can include, in some embodiments, one or more processors (e.g., a desktop computer, laptop, smartphone, tablet, etc.) coupled to a digital camera (e.g., configured to receive the digital image data collected by the digital camera) and capable of communicating the digital image data collected by the digital camera to a server (e.g., the server 210 of FIG. 2) via a communications network (e.g., communications network 201 of FIG. 2).
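
A minimum-specification check of the kind described above might, as a sketch, test an incoming image for a raw file format and a megapixel threshold. The 30 megapixel default and the 15 megapixel alternative come from the paragraph; the `ImageSpec` type and the list of raw file extensions are assumptions, and recognizing raw files by extension is a simplification (a real check would inspect the file contents and metadata).

```python
import os
from dataclasses import dataclass

# Assumed list of raw-format extensions; detecting raw files by extension is a simplification.
RAW_EXTENSIONS = {".dng", ".cr2", ".cr3", ".nef", ".arw", ".raf", ".orf", ".rw2"}

@dataclass
class ImageSpec:
    """Hypothetical summary of one captured image."""
    file_name: str
    width_px: int
    height_px: int

    @property
    def megapixels(self) -> float:
        return self.width_px * self.height_px / 1_000_000

def meets_minimum_spec(spec: ImageSpec, min_megapixels: float = 30.0) -> bool:
    """True if the image is in a raw format and has at least `min_megapixels` of resolution."""
    extension = os.path.splitext(spec.file_name)[1].lower()
    return extension in RAW_EXTENSIONS and spec.megapixels >= min_megapixels

print(meets_minimum_spec(ImageSpec("IMG_0001.CR3", 6720, 4480)))  # True (about 30.1 MP)
print(meets_minimum_spec(ImageSpec("IMG_0002.CR3", 6000, 4000)))  # False (24.0 MP)
```

Passing `min_megapixels=15.0` models the lower-resolution alternative mentioned in the paragraph.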

[0029] As another example, in some embodiments the digital imaging device may be integrated with or operable in conjunction with a magnification device, such as a microscope. (See FIGS. 8A and 8B.)

[0030] In some embodiments, the client device 120 can be configured to capture digital image data (e.g., image data of the object 110) in a raw digital image file format, together with associated metadata. For example, the client device 120 can output raw digital image files (e.g., raw digital image data) with associated metadata that can include one or more of the following: output resolution, sensor metadata (e.g., the size of the digital sensor, the attributes of the sensor’s Color Filter Array (“CFA”) and its color profile, etc.), image metadata (e.g., the exposure settings, camera model, lens model, etc.), the date and location of the shot/image, user information, and any other information needed to accurately (e.g., correctly) interpret the digital image data collected by the client device 120. Additionally, in some embodiments, the associated metadata of the digital image data (e.g., metadata of raw digital image files) output by the client device 120 can indicate, or can be used by the systems and methods of the present disclosure to determine, that the digital image data is the original (e.g., unmodified) data as it was collected by the image sensor of the client device 120. Thus, in some embodiments, the metadata associated with the digital image data collected by the client device 120 can be used to verify that the corresponding digital images have not been modified, altered, or otherwise changed from the image data collected by the image sensor of the client device 120.
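
The paragraph does not say how the metadata establishes that image data is unmodified. One common technique, shown here only as an assumed illustration, is to record a cryptographic digest (SHA-256) of the raw file bytes at capture time alongside the metadata, then recompute and compare the digest when the file is received. The record layout below is hypothetical.

```python
import hashlib
import hmac

def capture_record(raw_bytes: bytes, metadata: dict) -> dict:
    """Pair capture metadata with a SHA-256 digest of the raw file bytes (hypothetical record format)."""
    return {"metadata": dict(metadata), "sha256": hashlib.sha256(raw_bytes).hexdigest()}

def is_unmodified(raw_bytes: bytes, record: dict) -> bool:
    """True if the received bytes hash to the digest stored at capture time."""
    digest = hashlib.sha256(raw_bytes).hexdigest()
    # compare_digest compares the two hex strings in constant time.
    return hmac.compare_digest(digest, record["sha256"])

raw = b"\x00\x01example raw sensor data"
record = capture_record(raw, {"camera_model": "example", "exposure": "1/125"})
print(is_unmodified(raw, record))            # True
print(is_unmodified(raw + b"\x00", record))  # False: a single appended byte changes the digest
```

A digest alone detects changes made after the record was created; it does not prove that the record itself is genuine, which would additionally require, for example, signing the record with a key held by the capture device.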

[0031] In some embodiments, the client device 120 can include a physical support structure (not shown), including, for example, a tripod, gimbal, adjustable stand, or other suitable support structure that can be configured to retain the client device 120 in a fixed position relative to the object 110. For example, the client device 120 can be disposed on a tripod that is configured to retain the client device 120 at the first distance 112 relative to the object 110, with the object 110 at a fixed position within the field of view (FOV) of the client device 120 (e.g., configured to keep the object 110 at the position within the FOV of the client device 120 set by a user of the client device 120). In some embodiments, the client device 120 can be configured on a mechanized support structure, which is configured to position the client device 120 relative to the object 110 (e.g., according to one or more positioning sensors configured to allow the mechanized support to determine the position of the client device 120 relative to the object 110) and automatically collect the image data of the object 110 at a variety of distances (e.g., the first distance 112 and the second distance 114, shown in FIG. 1B) and for a variety of different regions of the object 110 (e.g., a foreground, a background, a subject, one or more quadrants, and the like).

[0032] The client device 120 can acquire digital image data or any portion thereof. For example, the client device 120 need not acquire all of the digital image data and, in some examples, the digital image data may be acquired by a plurality of different client devices. The digital image data (e.g., acquired by any number of client devices) can comprise one or more separate digital images of the object 110 viewed at the first distance 112. Accordingly, the present disclosure is not limited to digital image data acquired by a single client device or that is otherwise associated with (e.g., provided by, received from, etc.) any particular number of different sources of the image data. Examples of the present disclosure, therefore, can include any number of client devices (e.g., three different client devices that are each at separate locations (e.g., in different states) and that may, individually or relative to each other, acquire image data at any number of different times (e.g., on, or over, different times, days, months, and years)).

[0033] The one or more digital images of the object 110 may comprise, for example, an image of the entire object 110 (e.g., an entire surface of a luxury good, gemstone, a surface of a coin, an image surface of a stamp, an entire canvas, a painting with its frame, an entire sculpture, etc.) with a minimum border of space between the object and the edges (e.g., frame) of the digital image. In different example embodiments, the image may include the entire surface of the object, a majority of the surface of the object, or representative portions or segments of the object. The entire surface may be used if, for example, the overall dimensions (length, height, width, volume, etc.) of the object or the consistency or variation in certain features across the surface are to be considered, whereas less than the entire surface may be used if, for example, the features to be considered are available in the portion(s) captured in the image(s). In some examples of the present disclosure, the object 110 can be one or more different types of valuable objects and need not be limited to creative works such as paintings and sculptures. For example, in some embodiments, the object 110 can include any of the following, non-limiting and non-exhaustive, examples: a gemstone, jewelry, one or more luxury goods (e.g., handbags, wallets, shoes, boots, items of clothing such as dresses, and accessories such as belts, watches, etc.), one or more collectible stamps or other printed media (e.g., baseball cards, etc.), one or more coins (e.g., collector’s coins), one or more precious metals, photographic prints, among other examples.

[0034] For example, in some embodiments, the present disclosure may be used to recognize, certify, and authenticate one or more luxury products (e.g., purses, handbags, shoes, clothing, jewelry, etc.) and may provide, based on manufacturing features, design features, or product composition features, an individualized ‘fingerprint’ or dataset to identify copies of a particular luxury product. As another example, the present disclosure can be used for quality assurance of pharmaceutical products. As another example, the present disclosure can be used for quality assurance of a manufacturing process, more generally. The present disclosure can confirm an object’s compliance with one or more manufacturing quality standards and/or determine a product’s authenticity at any step within the product’s supply chain. Additionally, the present disclosure may, in some examples, be used to determine the quality of edible goods, to determine the authenticity of physical currency and other physical financial instruments, and to perform satellite imagery analyses, spatial imagery analyses, and medical imagery analyses.

[0035] The digital image data captured by the client device 120 at the first distance 112 can include an image of the object 110 that is a complete image that includes all of the object 110 in a single image, without any portion of the object 110 ‘touching’ (e.g., coming into contact with) any of the edges of the digital image or a specified margin around the perimeter of the digital image. Additionally, the one or more digital images of the object 110 at the first distance 112 can include one or more additional images of the object 110 at the first distance 112 (e.g., one or more images of a feature, edge, detail, or other portion of the object 110).
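
The requirement that no portion of the object touch the image edges or a specified margin can be checked once the object's bounding box in the image is known. A minimal sketch, assuming the bounding box comes from some object detector or segmentation step (not specified in the disclosure) and that the margin is a hypothetical 5% of each image dimension:

```python
def is_fully_framed(bbox: tuple[float, float, float, float], image_w: int, image_h: int,
                    margin_frac: float = 0.05) -> bool:
    """Check that an object's bounding box lies entirely inside a margin around the image edges.

    `bbox` is (x_min, y_min, x_max, y_max) in pixels, e.g., from an object detector;
    `margin_frac` is the minimum border as a fraction of each image dimension.
    """
    x_min, y_min, x_max, y_max = bbox
    margin_x = margin_frac * image_w
    margin_y = margin_frac * image_h
    return (x_min >= margin_x and y_min >= margin_y
            and x_max <= image_w - margin_x and y_max <= image_h - margin_y)

print(is_fully_framed((400, 300, 5500, 3700), 6000, 4000))  # True
print(is_fully_framed((100, 300, 5500, 3700), 6000, 4000))  # False: left edge intrudes into the margin
```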

[0036] Accordingly, in some embodiments, the first distance 112 may be a distance that is determined, in part, by the physical dimensions of the object 110 (e.g., to enable the client device 120 to capture the entire object 110 in a single image). For example, the first distance 112 may be the shortest distance between the client device 120 and the object 110 at which the client device 120 can capture a correctly framed (e.g., within specified minimum margins) digital image of the object 110. Alternatively, in some embodiments, the first distance 112 may be determined, in part, according to a specified minimum threshold distance between the client device 120 and the object 110 (e.g., the shortest distance, above the minimum threshold distance, that produces a fully framed image of the object 110).
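
The shortest correctly framed distance can be estimated with the pinhole camera approximation, an assumption that ignores lens distortion and close-focus effects. At distance d, a lens of focal length f in front of a sensor of width s covers a scene width of d·s/f; if the object (width w) may fill only a fraction (1 − 2m) of that width for a margin fraction m, then d ≥ w·f / ((1 − 2m)·s), and likewise for height. The margin and example dimensions below are hypothetical.

```python
def min_framing_distance(obj_w: float, obj_h: float, focal_len: float,
                         sensor_w: float, sensor_h: float, margin_frac: float = 0.05) -> float:
    """Shortest distance at which the whole object fits inside the margins (pinhole model).

    All lengths use one unit (here millimetres). At distance d, a pinhole camera covers a
    scene width of d * sensor_w / focal_len; the object may fill only (1 - 2 * margin_frac)
    of that width, and likewise for height.
    """
    usable = 1.0 - 2.0 * margin_frac
    if usable <= 0:
        raise ValueError("margin_frac must be less than 0.5")
    d_for_width = obj_w * focal_len / (usable * sensor_w)
    d_for_height = obj_h * focal_len / (usable * sensor_h)
    # The binding constraint is whichever axis needs the greater distance.
    return max(d_for_width, d_for_height)

# 1000 mm x 500 mm object, 50 mm lens, 36 mm x 24 mm full-frame sensor.
print(round(min_framing_distance(1000, 500, 50, 36, 24)))  # 1543 (mm)
```

Here the object's width is the binding constraint; a taller object would instead be limited by the sensor height.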

[0037] In some embodiments, the data acquisition environment 100 can include one or more lighting systems 102a–102f configured to illuminate the object 110 and enable the client device 120 to collect accurate image data of the object 110. In some embodiments, a backdrop 104 may also facilitate the collection of image data of the object 110.

[0038] FIG. 1B is a diagram of the client device 120 configured to acquire digital image data of the object 110 at a second distance 114, according to one embodiment of the present disclosure. The client device 120 can acquire digital image data that comprises a plurality of digital images of the object 110 at the second distance 114. For example, in some embodiments, the client device 120 can be positioned at the second distance 114 to output digital images of only specific portions (e.g., less than the entirety) of the object 110. For example, at the second distance 114, the client device 120 can collect one or more digital images of specific regions of the object 110 (e.g., each individual image capturing a single quadrant, or fourth, of the object 110, or image data collected by dividing the object into 6, 9, 12, or more sections, etc.). In some embodiments, the client device 120 can collect image data based on a specified physical size (e.g., images that are 1×1 inches, 3×3 inches, etc.) and based on different features, details, edges, properties, or other portions that may exist within the object 110, including, for example, collecting digital images of an edge region, a center region, a detail region, a damage region, a rear surface region, an inclusion region, and the like. In some embodiments, the client device 120 may be substituted with a different client device (e.g., a client device similar or substantially identical to the client device 120, which avoids the inconvenience of moving the client device 120 between different distances from the object).
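
Dividing the object into quadrants or into 6, 9, 12, or more sections amounts to laying a rows-by-columns grid over its extent. A minimal sketch, assuming the extent is known in pixels (or any other integer unit) and that a uniform grid is wanted; integer division spreads any remainder so that the tiles exactly cover the full extent:

```python
def grid_tiles(width: int, height: int, rows: int, cols: int) -> list[tuple[int, int, int, int]]:
    """Split an extent of width x height into rows x cols rectangular tiles.

    Returns (x0, y0, x1, y1) boxes, row by row; boundaries are computed with integer
    division so adjacent tiles share edges and together cover the whole extent.
    """
    xs = [c * width // cols for c in range(cols + 1)]
    ys = [r * height // rows for r in range(rows + 1)]
    return [(xs[c], ys[r], xs[c + 1], ys[r + 1]) for r in range(rows) for c in range(cols)]

quadrants = grid_tiles(6000, 4000, 2, 2)  # 4 sections
twelve = grid_tiles(6000, 4000, 3, 4)     # 12 sections
```

Grids of 2×3 and 3×3 give the 6- and 9-section cases. Tiles of a fixed physical size (e.g., 1×1 inches) would additionally require knowing how many pixels correspond to an inch at the capture distance.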

[0039] In some embodiments, for example, the client device 120 may capture image data of the object comprising at least the following: at least two digital images of the entire object at a first distance 112; at least two digital images of the object, each collected at a second distance 114, less than the first distance 112, and with the client device 120 positioned directly in front of the detail of the object to be captured in the digital image(s); at least two closeup detail images, each image capturing separate details of the object, the client device 120 positioned directly in front of the detail(s) to be imaged, and collected at a third distance that is less than the second distance 114 (e.g., at a third distance that is between 5 and 15 centimeters, at a third distance that is between 1 and 5 centimeters, or at a third distance that is between 0.01 and 0.1 centimeters, etc.); at least one image of the entire backside of the object (e.g., digital image 602, shown in FIG. 6); at least one backside detail image (e.g., support image data 604, shown in FIG. 6); and at least two images of separate details with the client device 120 positioned at a 45 degree angle relative to a surface of the object 110 (e.g., with the imaging device angled 45 degrees from the edge of a planar surface of a gemstone).
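
The minimum capture set in the preceding paragraph can be checked mechanically before upload. The sketch below counts images per category and reports any shortfall; the minimum counts transcribe the example protocol, while the shot labels are hypothetical tags that a capture application would be assumed to attach to each image.

```python
from collections import Counter

# Hypothetical shot labels; the minimum counts follow the example protocol above.
MINIMUM_PROTOCOL = {
    "full_first_distance": 2,     # entire object at the first distance
    "detail_second_distance": 2,  # details at the second, shorter distance
    "closeup_third_distance": 2,  # separate close-up details at the third distance
    "backside_full": 1,           # entire backside of the object
    "backside_detail": 1,         # backside detail
    "detail_45_degrees": 2,       # separate details imaged at a 45 degree angle
}

def missing_shots(shot_labels: list[str]) -> dict[str, int]:
    """Return {label: shortfall} for every protocol category not yet satisfied."""
    counts = Counter(shot_labels)
    return {label: need - counts[label]
            for label, need in MINIMUM_PROTOCOL.items()
            if counts[label] < need}

shots = (["full_first_distance"] * 2 + ["detail_second_distance"] * 2
         + ["closeup_third_distance"] + ["backside_full"] + ["detail_45_degrees"] * 2)
print(missing_shots(shots))  # {'closeup_third_distance': 1, 'backside_detail': 1}
```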

[0040] FIG. 1C depicts example embodiments in which a user obtains visual information of an object to be evaluated. It is noted that the procedure depicted in FIGS. 1A and 1B (e.g., one for data archival that uses higher-quality images, such as images obtained using added lighting in a “studio” setup) need not be employed by the user wishing to evaluate an object’s provenance and/or surface status. As depicted in FIG. 1C, a client device 130 (e.g., a smartphone or other image capture device, which can be the same as, or different from, client device 120) can be used to image an object. In FIG. 1C, a handbag 140 is depicted on the left, and a vehicle 150 is depicted on the right. In various embodiments, the user may obtain one image or multiple images. If multiple images are acquired, in different examples, one or more first images 142 may be from a first distance, and one or more second images 144 may be from a second distance from the object. Similarly, if multiple images are acquired, one or more first images 152 may be from a first perspective/angle, and one or more second images 154 may be from a second perspective/angle. It is noted that, alternatively or additionally, video imagery may be obtained as a user moves the client device 130 to different positions relative to the object to be evaluated. In some embodiments, multiple different client devices may be used (e.g., a first client device that is a smartphone camera and a more specialized second client device that detects light differently, such as one that is better at capturing light of certain wavelengths).

[0041] In various embodiments, it is thus possible to capture or otherwise use any combination of one or more of: a single image from a first angle; a single image from a second angle; a single image from a first distance; a single image from a second distance; multiple images comprising any combination of a plurality of a first image from the first angle and the first distance, a second image from the first angle and the second distance, a third image from the second angle and the first distance, and a fourth image from the second angle and the second distance; and/or one or more segments (“clips”) with video imagery as the client device is stationary with respect to, or moved relative to, the object (e.g., moved between the first distance, the second distance, the first angle, and/or the second angle). Because video imagery may be processed by the client device 130 before being saved and output, and because such processing may remove or obfuscate certain visual information, it may be preferable to have “raw” or unprocessed images and video (rather than images or video processed, e.g., to account for camera jitter, enhance lighting, or reduce blurring). Once the image(s) and/or video are captured, they may be, for example, uploaded or otherwise transmitted to an object authenticity system for evaluation of provenance and/or surface status.

[0042] FIG. 2 is a block diagram of an object provenance system 200, according to one embodiment of the present disclosure. The system 200 can include the authentication server 210 in communication with one or more client devices 250, 252, 254 via a communication network 201.

[0043] The client devices 250, 252, and 254 may be any suitable digital imaging device (or combination of an imaging device and one or more processors and/or computing devices) that is capable of collecting image data of an object and communicating, via the network 201, the collected digital image data to the authentication server 210, as described above with reference to the client device 120 of FIGS. 1A and 1B. For example, the client devices 250, 252, and 254 may include, in some embodiments, a full frame digital camera coupled to one or more processors capable of communications (e.g., transmitting digital image data of an object, including metadata associated with one or more collected digital images) via the network 201. In that example, the digital camera of the one or more client devices 250, 252, and 254 may be a full frame digital camera capable of outputting digital images in a raw image file format and with a digital resolution of at least 30 megapixels. The one or more processors coupled to the digital camera may include a laptop or mobile (e.g., smartphone or tablet) computing device that is capable of receiving the digital image data collected by the digital camera and communicating it, via the network 201 (e.g., the internet), to one or more authentication servers (e.g., the authentication server 210). As described previously with reference to FIGS. 1A and 1B, a variety of different configurations are contemplated for the client device(s), the authentication server 210, or both, and for any of the components or data included in either or both of them.
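
The disclosure does not define a transmission format for image data and its metadata. As a hypothetical sketch only, a client could bundle each image's raw bytes and metadata into a single JSON document, base64-encoding the binary data so that it can be embedded in JSON text. All field names here are assumptions.

```python
import base64
import json

def build_upload_payload(object_id: str, images: list[tuple[str, bytes, dict]]) -> str:
    """Serialize captured images and their metadata into one JSON document.

    `images` holds (file_name, raw_bytes, metadata) tuples. All field names are
    hypothetical; base64 makes the binary image data safe to embed in JSON text.
    """
    return json.dumps({
        "object_id": object_id,
        "images": [
            {
                "file_name": name,
                "metadata": metadata,
                "data_b64": base64.b64encode(data).decode("ascii"),
            }
            for name, data, metadata in images
        ],
    })

payload = build_upload_payload(
    "object-110", [("IMG_0001.CR3", b"raw sensor bytes", {"camera_model": "example"})]
)
```

Base64 enlarges binary data by about one third. For large raw files, a multipart upload or a streamed binary request body avoids that overhead.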

[0044] The authentication server 210 can include one or more processors 212, a network interface 214, an input/output (“I/O”) interface 216, and a memory 218. The one or more processors 212 may include one or more general purpose devices, such as an Intel®, AMD®, or other standard microprocessor. Alternatively, or in addition, in some embodiments, the one or more processors 212 may include a special purpose processing device, such as an ASIC, SoC, SiP, FPGA, PAL, PLA, FPLA, PLD, or other customized or programmable device. The one or more processors 212 can perform distributed (e.g., parallel) processing to execute or otherwise implement functionalities of the presently disclosed embodiments. The one or more processors 212 may run a standard operating system and perform standard operating system functions. Any standard operating system may be used, such as, for example, Microsoft® Windows®, Apple® MacOS®, Disk Operating System (DOS), UNIX, IRIX, Solaris, SunOS, FreeBSD, Linux®, IBM® OS/2® operating systems, and so forth.

[0045] The network interface 214 may facilitate communication with other computing devices and/or networks, such as the communications network 201, the client devices 250, 252, and 254, and/or other devices (e.g., one or more additional authentication servers) and/or communications networks. The network interface 214 may be equipped with conventional network connectivity, such as, for example, Ethernet (IEEE 802.3), Token Ring (IEEE 802.5), Fiber Distributed Data Interface (FDDI), or Asynchronous Transfer Mode (ATM). Further, the network interface 214 may be configured to support a variety of network protocols such as, for example, Internet Protocol (IP), Transmission Control Protocol (TCP), Network File System over UDP/TCP, Server Message Block (SMB), Microsoft® Common Internet File System (CIFS), Hypertext Transfer Protocol (HTTP), Direct Access File System (DAFS), File Transfer Protocol (FTP), Real-Time Publish Subscribe (RTPS), Open Systems Interconnection (OSI) protocols, Simple Mail Transfer Protocol (SMTP), Secure Shell (SSH), Secure Sockets Layer (SSL), and so forth.

[0046] The I/O interface 216 may facilitate interfacing with one or more input devices and/or one or more output devices. The input device(s) may include a keyboard, mouse, touch screen, scanner, digital camera, digital imaging sensor(s), light pen, tablet, microphone, sensor, or other hardware with accompanying firmware and/or software. The output device(s) may include a monitor or other display, printer, speech or text synthesizer, switch, signal line, or other hardware with accompanying firmware and/or software.

[0047] The memory 218 may include static RAM, dynamic RAM, flash memory, one or more flip-flops, ROM, CD-ROM, DVD, disk, tape, or magnetic, optical, or other computer storage media. The memory 218 may include a plurality of engines 230 (e.g., program modules or program blocks) and program data 220. The memory 218 may be local to the authentication server 210, as shown, or may be distributed and/or remote relative to the authentication server 210.

[0048] The memory 218 may also include program data 220. Data generated by the authentication server 210, such as by the engines 230 or other components of the server 210, may be stored on the memory 218, for example, as stored program data 220. The stored program data 220 may be organized as one or more databases. In certain embodiments, the program data 220 may be stored in a database system. The database system may reside within the memory 218. In other embodiments, the program data 220 may be remote, such as in a distributed computing and/or storage environment (e.g., cloud computing or one or more remote third-party services). For example, the program data 220 may be stored in a database system on a remote computing device. In still other embodiments, portions of the program data 220, including, for example, one or more outputs generated by the engines 230, may be stored in a content-addressable storage system, such as a blockchain data storage system.
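To illustrate what content addressing means for stored outputs such as scores, the following is a minimal Python sketch, assuming SHA-256 over canonical JSON and an in-memory dictionary; the class `ContentAddressableStore` and its methods are hypothetical. It shows only the addressing idea: a blockchain data storage system would additionally chain records into hash-linked blocks and replicate them across nodes.

```python
import hashlib
import json


class ContentAddressableStore:
    """Hypothetical in-memory store keyed by the SHA-256 hash of each record."""

    def __init__(self):
        self._blobs = {}

    def put(self, record: dict) -> str:
        # Canonical JSON (sorted keys, no extra whitespace) so that identical
        # records always serialize, and therefore hash, identically.
        payload = json.dumps(record, sort_keys=True, separators=(",", ":")).encode("utf-8")
        key = hashlib.sha256(payload).hexdigest()
        self._blobs[key] = payload
        return key

    def get(self, key: str) -> dict:
        payload = self._blobs[key]
        # Re-hash on read: any alteration of the stored bytes changes the hash.
        if hashlib.sha256(payload).hexdigest() != key:
            raise ValueError("stored content does not match its address")
        return json.loads(payload)


store = ContentAddressableStore()
key = store.put({"object_id": "obj-001", "origin_score": 0.87})
# Same content with a different key order maps to the same address.
same_key = store.put({"origin_score": 0.87, "object_id": "obj-001"})
```

Because the address is derived from the content, a stored output cannot be silently edited in place without its address ceasing to match.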

[0049] The authentication server 210 further includes program data 220, comprising comparison data 222, one or more extraction datasets 224, one or more feature variable datasets 226, origin profiles 227, object categories 228, and one or more artificial intelligence models 229.

[0050] The comparison data 222 may include one or more datasets based on (e.g., generated from) the digital image data of a plurality of additional objects. The comparison data 222 may include, for example, the digital image data collected for each of the additional objects in the plurality of additional objects (e.g., each digital image dataset of an additional object that has been received by the authentication server 210). In some embodiments, the comparison data 222 may include one or more imagery datasets of each additional object, where each additional object is a similar type of object as the object to be authenticated. For example, for authentication of an object that is a gemstone, the comparison data 222 may include one or more imagery datasets of gemstones, including, for example, one or more gemstones of the same gemstone type as the object or gemstone being authenticated (e.g., for authentication of a ruby, the comparison data 222 may include one or more imagery datasets of one or more rubies).

[0051] Additionally, the comparison data 222 may include one or more of the feature variable datasets 226, including a set of one or more feature variables for each of the additional objects of the plurality of additional objects (e.g., one or more feature variable(s) previously determined, by the authentication server 210 and/or feature variable engine 236, for an additional object and based on the corresponding digital image dataset received by the server 210 or image data processor 232). For example, the comparison data 222 may include a feature variable dataset 226 for each additional object that includes the one or more feature variables determined (e.g., by the feature variable engine 236) for that same object. In some embodiments, the comparison dataset may include a subset of the feature variables determined for an additional object and included in the corresponding feature variable dataset 226.

[0052] Each set of feature variables included in the comparison data can comprise any combination of one or more of the different types of feature variables included in the feature variable datasets 226, which are described in greater detail below.

[0053] The one or more extraction datasets 224 can include one or more datasets extracted or otherwise generated from the received image data (e.g., the image data received for the object to be authenticated). Alternatively, or in addition, in some embodiments, the extraction datasets 224 can include extraction data generated from one or more imagery datasets, or generated by the extraction engine 234 based on the image data of the object. In some embodiments, the extraction engine 234 may generate one or more different types of extraction datasets, including, for example, one or more object color extraction datasets (e.g., object inclusions, body color, detail color, highlight color, black distribution, chromatic spectrum, chromatic hatching, and chromatic volumes), one or more object volumetric extraction datasets (e.g., object shape, object patterns, object depth, object image, object profile, and/or object details), such as the volumetric extraction dataset 510 and the detail extraction dataset 512 shown in FIG. 5, and one or more image extraction datasets (e.g., collectable stamp image(s), coin image(s), signature detail(s), calligraphic brush pattern(s), backside pattern, print pattern, stamp pattern, etc.).

[0054] Alternatively, or in addition, the extraction engine 234 may generate one or more extraction datasets that can include separately or in combination, for example, reflectivity data, opacity data, material data, print data (e.g., for stamps, trading cards, and/or coins), palette data, mid-tones data, pattern data, campiture data, pigment density data, signature material data, signature color data, signature evaluation data, and evaluation of pressure points data.

[0055] The one or more feature variable datasets 226 can include each set of feature variables determined for an object during operation of the system 200 (e.g., during the operation of the server 210 to determine an origin of, generate an origin score for, generate an authenticity score of, or otherwise ascertain authenticity of, that object). The feature variable datasets 226 can include a plurality of feature variable datasets with one or more feature variables determined for a particular (e.g., a single specific) object. The feature variables stored in the various sets of the feature variable datasets 226 can include, for example, one or more of the following, non-exhaustive and non-limiting, list of different feature variables: a reflectivity percentage, a color percentage, a uniformity percentage, an inclusions percentage, an inclusions frequency map, pantone series, a posterized pantone series, a brights percentage, a mid-tones percentage, one or more discrepancies with a previous image data for the same object (or the output(s) generated based on the previous image data), a brush sign frequency map on a subject of the object, a brush sign frequency map on a background of the object, a positive campiture percentage, a composition probability map of an object, a pigment density map, a pigment permeation map, a signature pantone code, a signature tag word, a calligraphic sign vector, and a calligraphic pressure map. Additionally, in other embodiments, the feature variable datasets 226 may include one or more additional feature variables that are not expressly included in the above list of feature variables but that, nevertheless, are inherently disclosed in the variety of different feature variables that are expressly disclosed in the list above. For example, in some embodiments, the one or more feature variable datasets may include a modified version of one or more feature variables, including a combination of one or more of the feature variables listed above.

[0056] Each set in the feature variable datasets 226 can include its one or more feature variables (e.g., the feature variable data, or the feature variables themselves) and associated metadata that identifies at least one object (or its digital image data) associated with that set of feature variables. For example, metadata associated with, or contained in, a feature variable dataset can identify one or more, or all, of: objects used to determine the feature variables corresponding to that set of feature variables; one or more comparison data (or comparison datasets) with, in some examples, one or more corresponding sets of feature variables, and the like.
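One way to hold a feature variable set together with its identifying metadata is sketched below in Python. The class `FeatureVariableSet`, its field names, and the feature keys are hypothetical; the `subset` method reflects the embodiment in which a comparison dataset carries only a subset of the feature variables determined for an object.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterable, List


@dataclass
class FeatureVariableSet:
    """Hypothetical record for one set in the feature variable datasets 226."""
    features: Dict[str, float]  # feature name -> value
    object_ids: List[str]  # object(s) whose image data produced this set
    comparison_dataset_ids: List[str] = field(default_factory=list)

    def subset(self, names: Iterable[str]) -> "FeatureVariableSet":
        """Copy restricted to the named features, keeping the metadata."""
        return FeatureVariableSet(
            features={n: self.features[n] for n in names if n in self.features},
            object_ids=list(self.object_ids),
            comparison_dataset_ids=list(self.comparison_dataset_ids),
        )


fvs = FeatureVariableSet(
    features={"brights_percentage": 12.5, "mid_tones_percentage": 48.0, "reflectivity_percentage": 3.1},
    object_ids=["obj-001"],
)
sub = fvs.subset(["brights_percentage", "reflectivity_percentage"])
```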

[0057] The origin profiles 227 can include a plurality of different origin profiles (or origin profile data), and each origin profile corresponds to a single origin of one or more objects (e.g., an origin of one or more of the plurality of additional objects associated with a comparison dataset). Each of the origin profiles 227 can include one or more different pieces of information regarding its corresponding origin. For example, an origin profile can include at least one name associated with the profile’s origin (e.g., one or more given or legal names, one or more stage or pen names, or one or more pseudonyms of the origin). An origin profile can also include one or more periods of time associated with the origin or with one or more of the origin’s objects (e.g., a period of time defined by the origin’s lifetime of operation, one or more periods of time defined by the publication date(s) of one or more of the origin’s objects, or a period of time associated with those of the origin’s objects that belong to one or more object categories, such as one or more object types (gemstone, stamp, coin, jewelry, etc.), origin or source types, printing techniques, fabrication styles, artistic movements, or artistic styles). Additionally, an origin profile may include one or more geographic locations associated with the origin, including, for example, a geographic source, a geological region, one or more physical conditions, printing techniques, an author’s birthplace, one or more primary residences of an author associated with one or more of the author’s objects, a geographical region of one or more related objects and/or sources associated with the origin, etc. In some embodiments, an origin profile may contain one or more known, or previously authenticated, objects associated with the origin or any of the origin’s objects. 
For example, the origin profile may identify a plurality of objects that substantially influenced the characteristics of, or the style used in, one or more of the origin’s objects (e.g., one or more objects of another origin that was related and/or relevant to the origin). In some embodiments, an origin profile may include one or more characteristics associated with the origin’s object(s) and associated with one or more objects of one or more different origin(s). In some embodiments, the origin profile may include one or more types of information not expressly included in the examples of origin profile information that are described above. For example, in some embodiments, an origin profile may include one or more additional types of information regarding the profile’s origin, including, for example, a modified version of one or more examples of origin profile information described above and may include one or more combinations of two or more of the examples of origin profile information described above.
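The origin profile contents described above map naturally onto a record type. The Python sketch below is a hypothetical layout (the class `OriginProfile`, its fields, and the example values are illustrative assumptions, not a disclosed schema), with two helper checks for the name and time-period information.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class OriginProfile:
    """Hypothetical record for one entry of the origin profiles 227."""
    names: List[str]  # legal names, stage or pen names, pseudonyms
    active_period: Optional[Tuple[int, int]] = None  # (start_year, end_year)
    locations: List[str] = field(default_factory=list)  # geographic source, residences, etc.
    object_categories: List[str] = field(default_factory=list)
    authenticated_object_ids: List[str] = field(default_factory=list)
    influencing_object_ids: List[str] = field(default_factory=list)

    def matches_name(self, name: str) -> bool:
        """Case-insensitive match against any recorded name."""
        return name.casefold() in {n.casefold() for n in self.names}

    def covers_year(self, year: int) -> bool:
        """True if `year` falls inside the recorded active period."""
        if self.active_period is None:
            return False
        start, end = self.active_period
        return start <= year <= end


profile = OriginProfile(
    names=["Example Mint", "EM Workshop"],
    active_period=(1850, 1910),
    locations=["Example Region"],
    object_categories=["coin"],
)
```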

[0058] The object categories 228 can include one or more different categories of object(s), each of which may comprise an association between the one or more objects (or one or more of their characteristics) included in that category. For example, the object categories may include one or more categories of objects according to an artistic style or creative movement that is associated with each of the objects (or their characteristics) included in that category. The object categories 228 can include categories for objects associated with one or more of the following non-limiting and non-exhaustive list of example categories: a gemstone category, a physical composition category, an inclusion percentage category, a precious metal category, a collectables category, a tenebrosi category, a baroque category, an impressionist category, a surrealist category, a cubism category, a pop art category, a photorealistic category, and the like. Some embodiments may include one or more additional categories of objects beyond the non-limiting list of example categories above. For example, the categories of object(s) may include a category for an association between a plurality of objects (or their characteristics) based on one or more general criteria, principles, patterns, and the like, that may be substantially present in, or define an association between, each of the objects included in (or associated with) that category.

[0059] The one or more artificial intelligence models 229 can determine an object authenticity score, object origin, object origin score, or object origin authenticity score, of the first object based on at least one or more feature variables of the first object and a comparison dataset.

[0060] The one or more artificial intelligence models 229 can include one or more machine learning models trained to generate an output based on one or more matching portions of the results for a digital image dataset of an object (e.g., one or more feature variables, one or more origin profiles, one or more object categories, or digital image data, etc.) and of a comparison dataset (e.g., one or more corresponding feature variables, origin profile(s), object categories, or digital image data). The output can be used to determine an authenticity score, an origin, an origin score, or an authenticity result of, or otherwise ascertain an authenticity or origin for, the object captured or otherwise included in the corresponding image data (e.g., the object imaged by one or more of the client devices 250, 252, 254 or otherwise captured in received image data, including first image data, second image data, third image data, fourth image data, etc.). For example, the models 229 can include an artificial intelligence model trained to identify any feature variables that are present in the comparison dataset. In an aspect, the feature variables can be used to determine the provenance of the object. For example, a provenance engine 240 can execute one or more artificial intelligence models to identify and associate objects, or their associated feature variables, origin profiles, etc. The provenance engine 240 may perform one or more of its functions via updates to the comparison dataset used to determine a provenance of (e.g., generate a provenance score for) the object in the image data received by the server 210. In an aspect, the feature variables can be utilized by the provenance engine 240 to determine an authenticity of the object. For example, an authenticity engine of the provenance engine 240 can execute one or more artificial intelligence models to identify and associate objects, or their associated feature variables, origin profiles, etc.
The authenticity engine may perform one or more of its functions via updates to the comparison dataset used to determine an authenticity of (e.g., generate an authenticity score for) the object in the image data received by the server 210. In an aspect, the feature variables can be used by the provenance engine 240 to determine an origin of the object. For example, an origin engine can execute one or more artificial intelligence models to identify and associate objects, or their associated feature variables, origin profiles, etc. The origin engine may perform one or more of its functions via updates to the comparison dataset used to determine an origin of (e.g., generate an origin score for) the object in the image data received by the server 210. In some implementations, the authentication server 210 (or comparison data processor 238) can execute one or more of the artificial intelligence models 229 of program data 220 in response to determining one or more feature variables for digital image data output by the image data processor 232.
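The matching step can be illustrated with a deliberately simple, rule-based stand-in for a trained model: score each comparison object by the fraction of shared feature variables whose values agree within a relative tolerance, then keep the best score per origin. This Python sketch assumes numeric feature variables and a 5% relative tolerance; the function names and data are hypothetical, and a trained artificial intelligence model 229 would learn this mapping rather than apply a fixed rule.

```python
from typing import Dict, List, Tuple

Features = Dict[str, float]


def match_fraction(query: Features, reference: Features, rel_tol: float = 0.05) -> float:
    """Fraction of shared feature variables whose values agree within rel_tol."""
    shared = [name for name in query if name in reference]
    if not shared:
        return 0.0
    hits = 0
    for name in shared:
        a, b = query[name], reference[name]
        scale = max(abs(a), abs(b), 1e-9)  # avoid dividing by zero
        if abs(a - b) / scale <= rel_tol:
            hits += 1
    return hits / len(shared)


def score_origins(query: Features, comparison: List[Tuple[str, Features]], rel_tol: float = 0.05) -> Dict[str, float]:
    """Best match fraction per origin across the comparison dataset."""
    scores: Dict[str, float] = {}
    for origin, features in comparison:
        s = match_fraction(query, features, rel_tol)
        scores[origin] = max(scores.get(origin, 0.0), s)
    return scores


query = {"brights": 12.0, "mid_tones": 50.0, "reflectivity": 3.0}
comparison = [
    ("origin_A", {"brights": 12.3, "mid_tones": 49.0, "reflectivity": 3.05}),
    ("origin_B", {"brights": 20.0, "mid_tones": 50.5, "reflectivity": 1.0}),
]
scores = score_origins(query, comparison)
best_origin = max(scores, key=scores.get)
```

Here `scores` evaluates to 1.0 for `origin_A` (all three shared features agree) and about 0.33 for `origin_B` (one of three).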

[0061] In some examples, the artificial intelligence models 229 of the authentication server 210 can be, or may include, one or more neural networks. Each of the artificial intelligence models 229 can be a single-shot multibox detector (SSD) and can process an entire dataset of the received image data (e.g., digital image data, one or more feature variables, origin profile(s), and the object categories of the object to be authenticated) in one forward pass. Processing the entire dataset of the object (e.g., the object to be authenticated) in one forward pass can improve processing efficiency and enables the artificial intelligence models of the authentication server 210 to be utilized for object authentication tasks in near real time or with minimal delay (e.g., minimal delay between the time when image data of the object is collected and the time when the authenticity result of the object is provided).

[0062] In some examples, one or more of the artificial intelligence models 229 can incorporate aspects of a deep convolutional neural network (CNN) model, which may include one or more layers that may implement machine-learning functionality for one or more portions of the operations performed by the engines 230. The one or more layers of the models 229 can include, in a non-limiting example, convolutional layers, max-pooling layers, activation layers, and fully connected layers, among others. Convolutional layers can extract features from the input image dataset(s) (or input comparison data) of the object using convolution operations. In some examples, the convolutional layers can be followed by activation functions (e.g., a rectified linear unit (ReLU) activation function, an exponential linear unit (ELU) activation function, etc.). The convolutional layers can be trained to produce a hierarchical representation of the input data (e.g., input image data and/or feature variables based on the same), where lower-level features are combined to form higher-level features that may be utilized by subsequent layers in the artificial intelligence model(s) 229 or by the execution of a corresponding machine learning model (e.g., execution of one or more of the artificial intelligence models 229 by one or more of the feature variable engine 236, the comparison data processor 238, and/or the provenance engine 240).
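The convolution-plus-activation step can be shown on a toy feature map. The pure-Python sketch below assumes a single-channel input, a stride of 1, and no padding ("valid" mode), and, like most CNN libraries, it actually computes cross-correlation; a production model would use an optimized framework instead. The kernels are hand-picked edge detectors, not trained weights.

```python
from typing import List

Matrix = List[List[float]]


def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """Single-channel 2-D convolution, stride 1, no padding.

    Like most CNN libraries, this computes cross-correlation (the kernel is not flipped).
    """
    kh, kw = len(kernel), len(kernel[0])
    oh, ow = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj] for di in range(kh) for dj in range(kw))
            for j in range(ow)
        ]
        for i in range(oh)
    ]


def relu(fmap: Matrix) -> Matrix:
    """Rectified linear unit applied element-wise: max(0, x)."""
    return [[max(0.0, v) for v in row] for row in fmap]


image = [[0, 0, 1, 1] for _ in range(4)]  # dark left half, bright right half
edge_kernel = [[-1, 1], [-1, 1]]  # responds to dark-to-bright transitions
mirrored_kernel = [[1, -1], [1, -1]]  # responds to bright-to-dark transitions
edges = relu(conv2d_valid(image, edge_kernel))
no_edges = relu(conv2d_valid(image, mirrored_kernel))
```

With `edge_kernel`, the single dark-to-bright transition produces a column of 2s that survives ReLU; the mirrored kernel produces -2s that ReLU zeroes out.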

[0063] The artificial intelligence model(s) may include one or more max-pooling layers, which may, for example, down-sample the feature maps produced by the convolutional layers. The max-pooling operation can replace each set (e.g., window) of pixels in a feature map with a single value equal to the maximum value in that set. Max-pooling layers can reduce the dimensionality of data represented in the image data processor 232, the extraction data engine 234, the feature variable engine 236, the comparison data processor 238, the provenance engine 240, and any (or all) of the one or more artificial intelligence models 229. One or more of the models 229 may include multiple sets of convolutional layers followed by a max-pooling layer, with the max-pooling layer providing its output to the next set of convolutional layers in the artificial intelligence model. The model(s) 229 can include one or more fully connected layers, which may receive the output of one or more max-pooling layers, for example, and generate predictions (e.g., an authenticity score, associated origin profile(s), associated object categories, etc.) as described herein. A fully connected layer may include multiple neurons, which perform a dot product between the input to the layer and a set of trainable weights, followed by an activation function. Each neuron in a fully connected layer can be connected to all neurons or all input data of the previous layer. The activation function can be, for example, a sigmoid activation function that produces class probabilities for each object class for which the artificial intelligence model is trained. The fully connected layers may also predict the bounding box coordinates for each object detected in the input dataset(s) (e.g., in one or more feature variables of the object to authenticate or one or more corresponding portions of the comparison dataset).
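The max-pooling and fully connected operations described above can be sketched in pure Python as follows, assuming non-overlapping 2x2 pooling windows and a single output neuron with hypothetical weights chosen only for illustration.

```python
import math
from typing import List

Matrix = List[List[float]]


def max_pool2d(fmap: Matrix, size: int = 2) -> Matrix:
    """Non-overlapping max-pooling: each size x size window is replaced by its maximum.

    Rows or columns that do not fill a complete window are dropped.
    """
    rows, cols = len(fmap) // size, len(fmap[0]) // size
    return [
        [
            max(fmap[i * size + di][j * size + dj] for di in range(size) for dj in range(size))
            for j in range(cols)
        ]
        for i in range(rows)
    ]


def dense_sigmoid(inputs: List[float], weights: Matrix, biases: List[float]) -> List[float]:
    """Fully connected layer: each neuron takes a dot product with all inputs, adds a bias, applies sigmoid."""
    outputs = []
    for w_row, b in zip(weights, biases):
        z = sum(x * w for x, w in zip(inputs, w_row)) + b
        outputs.append(1.0 / (1.0 + math.exp(-z)))
    return outputs


fmap = [[1, 3, 2, 0], [4, 2, 1, 5], [0, 1, 7, 2], [3, 2, 0, 1]]
pooled = max_pool2d(fmap)  # [[4, 5], [3, 7]]
flat = [v for row in pooled for v in row]  # flatten before the dense layer
probs = dense_sigmoid(flat, [[0.1, -0.1, 0.2, -0.1]], [0.0])
```

A sigmoid scores each class independently, which suits labels that may co-occur; when classes are mutually exclusive, a softmax over the output neurons is the more common choice.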

[0064] The authentication server 210 may include several engines 230 (e.g., program modules), including an image data processor 232, an extraction data engine 234, a feature variable engine 236, a comparison data processor 238, and a provenance engine 240.

[0065] The engines 230 may include all or portions of the other elements of the authentication server 210 (e.g., program data 220, the processors 212, etc.). The engines 230 may run multiple operations concurrently or in parallel by or on the one or more processors 212. In some embodiments, portions of the disclosed engines, processors, components, blocks, and/or facilities are implemented as executable instructions embodied in hardware or in firmware, or stored on a non-transitory, machine-readable storage medium, such as the memory 218. The instructions may comprise computer program code that, when executed by a processor and/or computing device, causes a computing system (such as the processors 212 and/or the authentication server 210) to implement certain processing steps, procedures, and/or operations, as disclosed herein (e.g., one or more steps of methods 300 and 400, which are described below with reference to FIGS. 3 and 4, respectively). The modules, components, and/or facilities disclosed herein may be implemented and/or embodied as a driver, a library, an interface, an API, FPGA configuration data, firmware (e.g., stored on an EEPROM), and/or the like. In some embodiments, portions of the engines, processors, components, blocks, and/or facilities disclosed herein are embodied as machine components, such as general and/or application-specific devices, including, but not limited to: circuits, integrated circuits, processing components, interface components, hardware controller(s), storage controller(s), programmable hardware, FPGAs, ASICs, and/or the like. Accordingly, the engines and processors disclosed herein may be referred to as controllers, layers, services, modules, blocks, facilities, drivers, circuits, and/or the like.

[0066] The image data processor 232 can receive and process the digital image data collected by a client device 250, 252, 254 and received by the authentication server 210 via the network 201. The image data processor 232 may identify an object associated with each set of digital image data that the server 210 receives or stores (e.g., in program data 220 and/or memory 218) to determine the authenticity of (e.g., authenticity score(s) or other authenticity result(s) of) one or more object(s). Accordingly, in some embodiments, the image data processor 232 may identify one or more sets of image data associated with the same object (e.g., separate sets of image data received to generate multiple authenticity scores for the same object).
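Grouping received datasets by object can be sketched as below, assuming each dataset carries an `object_id` field in its metadata (a hypothetical field name; the disclosure does not specify how the associated object is identified). The helper `resubmitted_objects` is likewise hypothetical and flags objects with more than one dataset.

```python
from collections import defaultdict
from typing import Dict, Iterable, List


def group_by_object(datasets: Iterable[dict]) -> Dict[str, List[dict]]:
    """Group received image datasets by their (assumed) 'object_id' metadata field."""
    groups: Dict[str, List[dict]] = defaultdict(list)
    for ds in datasets:
        groups[ds["object_id"]].append(ds)
    return dict(groups)


def resubmitted_objects(groups: Dict[str, List[dict]]) -> List[str]:
    """Objects with more than one dataset, e.g. submitted for repeat scoring."""
    return sorted(obj for obj, items in groups.items() if len(items) > 1)


received = [
    {"object_id": "obj-1", "received": "2025-01-10"},
    {"object_id": "obj-2", "received": "2025-01-12"},
    {"object_id": "obj-1", "received": "2025-02-03"},
]
groups = group_by_object(received)
```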

[0067] In some embodiments, the image data processor 232 may verify whether a received digital image dataset adheres to one or more mandatory criteria (e.g., minimum specifications) for the acquisition of acceptable image data of an object. For example, the image data processor 232 may verify whether a received digital image dataset comprises digital image data in one or more file formats (e.g., a raw digital image file or the like), whether the digital image data (or the imaging device used to collect it) satisfies one or more minimum technical specifications (e.g., a minimum image resolution, a minimum digital image sensor size, etc.), and/or whether the digital image data has been collected according to specified imaging criteria (e.g., whether images are properly framed, whether image data was collected at proper distance(s), etc.). For example, the image data processor 232 may verify whether an image dataset was collected at a specified first distance by calculating an expected pixels per inch of the image dataset and determining an actual pixels per inch of the image dataset and comparing the expected pixels per inch with the actual pixels per inch.
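A mandatory-criteria check of this kind might look like the following Python sketch. The accepted raw file extensions and the 30-megapixel floor are assumptions borrowed from the full-frame camera example in paragraph [0043]; the names `ImageRecord` and `check_mandatory_criteria` are hypothetical.

```python
import os
from dataclasses import dataclass
from typing import List

# Assumed acceptance rules (illustrative only, not specified by the disclosure).
RAW_EXTENSIONS = {".cr3", ".nef", ".arw", ".dng"}
MIN_MEGAPIXELS = 30.0


@dataclass
class ImageRecord:
    filename: str
    width_px: int
    height_px: int


def check_mandatory_criteria(images: List[ImageRecord]) -> List[str]:
    """Return human-readable violations; an empty list means the dataset is acceptable."""
    if not images:
        return ["dataset contains no images"]
    problems = []
    for img in images:
        ext = os.path.splitext(img.filename)[1].lower()
        if ext not in RAW_EXTENSIONS:
            problems.append(f"{img.filename}: not a raw image file")
        megapixels = img.width_px * img.height_px / 1e6
        if megapixels < MIN_MEGAPIXELS:
            problems.append(f"{img.filename}: {megapixels:.1f} MP is below {MIN_MEGAPIXELS} MP")
    return problems


good = ImageRecord("front.NEF", 8256, 5504)  # about 45.4 MP, raw
bad = ImageRecord("back.jpg", 6000, 4000)  # 24.0 MP, not raw
```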

[0068] For example, in some embodiments, the image data processor 232 may determine an expected pixels per inch (e.g., at a first distance) for one or more digital images of the image dataset based on a digital image output resolution for the digital imaging device used to collect the image dataset and an expected (e.g., required) first distance. Additionally, in some embodiments, the image data processor 232 may determine an actual pixels per inch (e.g., at the first distance) for the one or more digital images of the image dataset based on the actual digital resolution of the one or more digital images and one or more physical dimensions of the object depicted in the image dataset. Accordingly, in some embodiments, the image data processor 232 may determine an actual pixels per inch of one or more digital images based on the actual resolution of the one or more images and based on the height or length of the object (e.g., a side length of a flat (substantially two-dimensional) painting (canvas) or a known length of a three-dimensional object or sculpture).
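The pixels-per-inch comparison can be sketched as follows. The expected value assumes an ideal pinhole camera whose horizontal field of view (`hfov_deg`, a parameter the disclosure does not name) covers a width of 2 * d * tan(hfov / 2) at distance d; the actual value divides the pixels spanned by a known object length by that length. The 5% tolerance and all example numbers are illustrative assumptions.

```python
import math


def expected_ppi(image_width_px: int, distance_in: float, hfov_deg: float) -> float:
    """Expected pixels per inch on a plane `distance_in` inches away (ideal pinhole camera)."""
    field_width_in = 2.0 * distance_in * math.tan(math.radians(hfov_deg) / 2.0)
    return image_width_px / field_width_in


def actual_ppi(object_span_px: float, object_length_in: float) -> float:
    """Pixels spanned by a known object length, divided by that length in inches."""
    return object_span_px / object_length_in


def distance_ok(expected: float, actual: float, rel_tol: float = 0.05) -> bool:
    """True when the actual ppi is within rel_tol of the expected ppi."""
    return abs(actual - expected) / expected <= rel_tol


exp_ppi = expected_ppi(8256, distance_in=40.0, hfov_deg=90.0)
```

With a 90° field of view at 40 inches, an 8256-pixel-wide image covers 80 inches, so about 103.2 ppi is expected; a 24-inch canvas spanning 2480 pixels (about 103.3 ppi) passes, while one spanning 1800 pixels (75 ppi) indicates an incorrect capture distance.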

[0069] The extraction engine 234 can extract or otherwise generate one or more separate imagery datasets from the image data received for an object (e.g., output by the image data processor 232). The extraction engine 234 may generate each of the one or more separate imagery datasets to include a specified type, or types, of digital images of the object. For example, the extraction engine 234 can generate a color imagery dataset comprising that portion of the image data pertinent to an analysis of one or more colors present in the object (e.g., embodiments of a color imagery dataset may exclude digital images that depict only the backside/rear canvas of an object). As another example, the extraction engine 234 can generate a volumetric imagery dataset comprising that portion of the image data that is associated with, or depicts a portion of, the volumetric features of the object. Similarly, in yet another example, the extraction engine 234 may generate a calligraphic imagery dataset using that portion of the digital image data that captures at least a portion of the calligraphy data that may be present in the image data of the object to be authenticated. In some embodiments, the extraction engine 234 may generate each of the one or more separate imagery datasets to include only a specified type, or types, of digital images of the object.
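Splitting received images into separate imagery datasets can be sketched as a tag-based filter. The tags (for example, `front`, `backside`, `signature`) and the rules table are hypothetical assumptions; the disclosure does not specify how image types are labeled.

```python
from typing import Dict, Iterable, List, Set

# Hypothetical rules: which image tags each imagery dataset accepts.
DATASET_RULES: Dict[str, Set[str]] = {
    "color": {"front", "detail"},  # backside-only images are excluded
    "volumetric": {"front", "raking_light", "side"},
    "calligraphic": {"signature", "backside"},
}


def split_imagery(images: Iterable[dict]) -> Dict[str, List[str]]:
    """Assign each image file to every imagery dataset whose rule accepts its tag."""
    datasets: Dict[str, List[str]] = {name: [] for name in DATASET_RULES}
    for img in images:
        for name, accepted in DATASET_RULES.items():
            if img["tag"] in accepted:
                datasets[name].append(img["file"])
    return datasets


images = [
    {"file": "a.dng", "tag": "front"},
    {"file": "b.dng", "tag": "backside"},
    {"file": "c.dng", "tag": "signature"},
    {"file": "d.dng", "tag": "raking_light"},
]
split = split_imagery(images)
```

An image may land in more than one imagery dataset (here `a.dng` feeds both the color and volumetric datasets), which matches the idea that each dataset is defined by the types of images it includes rather than by a partition of the input.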

[0070] The extraction engine 234 can generate one or more extraction datasets from the image data of an object (e.g., the image data received by or output from the image data processor 232) or, alternatively, from one or more imagery datasets generated by the extraction engine 234 based on the image data of the object. In some embodiments, the extraction engine 234 may generate one or more different types of extraction datasets, including, for example, one or more color extraction datasets (e.g., a distribution of highlights, mid-tones, and blacks; a chromatic spectrum; chromatic hatching; and chromatic volumes), one or more volumetric extraction datasets (e.g., craquelure patterns, craquelure details, brush patterns, canvas details, canvas weave pattern, backside/support detail), such as the volumetric extraction dataset 510 and the brushstroke extraction dataset 512 shown in FIG. 5, and one or more calligraphic extraction datasets (e.g., signature detail(s), calligraphic brush pattern(s), backside pattern).
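A highlights, mid-tones, and blacks distribution can be sketched by classifying each pixel's luminance. This Python sketch assumes 8-bit RGB pixels, Rec. 709 luma weights, and band cut-offs of 64 and 192; the thresholds and function names are illustrative assumptions, not values from the disclosure. Percentages of this kind could also serve as brights and mid-tones percentage feature variables.

```python
from typing import Dict, Iterable, Tuple

Pixel = Tuple[int, int, int]


def luma(pixel: Pixel) -> float:
    """Rec. 709 luma of an 8-bit RGB pixel (0-255)."""
    r, g, b = pixel
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def tonal_distribution(
    pixels: Iterable[Pixel], black_max: float = 64.0, highlight_min: float = 192.0
) -> Dict[str, float]:
    """Percentage of pixels falling in the blacks, mid-tones, and highlights bands."""
    counts = {"blacks": 0, "mid_tones": 0, "highlights": 0}
    total = 0
    for p in pixels:
        y = luma(p)
        if y < black_max:
            counts["blacks"] += 1
        elif y >= highlight_min:
            counts["highlights"] += 1
        else:
            counts["mid_tones"] += 1
        total += 1
    if total == 0:
        raise ValueError("no pixels supplied")
    return {band: 100.0 * n / total for band, n in counts.items()}


dist = tonal_distribution([(0, 0, 0), (10, 20, 30), (128, 128, 128), (255, 255, 255)])
```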

[0071] Alternatively, or in addition, the extraction engine 234 may generate one or more extraction datasets that include, for example, one or more extraction datasets for one or more of: palette data, mid-tones data, pattern data, campiture data, pigment density data, signature material data, signature color data, signature evaluation data, and evaluation of pressure points data.

[0072] For example, the extraction engine 234 may generate one or more color extraction datasets based on one or more digital images of a color image dataset, which the extraction engine 234 generated from a subset of the digital images included in the image data received for an object (e.g., digital image data, one or more digital image data packs, output from the image data processor 232 or received, via network 201, from one of the client devices 250, 252, 254). As another example, the extraction engine 234 may extract a calligraphic, or signature, extraction dataset (e.g., calligraphic extraction dataset 710 shown in FIG. 7) from a calligraphic imagery dataset that only includes at least one digital image of the backside of the object (e.g., backside image data 602, backside support image data 604, and canvas extraction dataset 610, each shown in FIG. 6), and at least one digital image of any calligraphy (e.g., any origin signature(s) or any other stylized writing) that is present in the object.

[0073] The feature variable engine 236 can determine one or more feature variables of an object based on one or more of the received image data (of the object), a plurality of imagery datasets generated by the extraction engine 234 based on the received image data, or one or more extraction datasets generated by the extraction engine 234 (e.g., as described in greater detail above). The one or more feature variables determined by the feature variable engine 236 may include, for example, one or more of: a pantone series, a posterized pantone series, a brights percentage, a mid-tones percentage, discrepancies with previous image data (or output(s) based on the previous image data) of the same object, a brush sign frequency map on a subject of the object, a brush sign frequency map on a background of the object, a positive campiture percentage, a probability map for a composition of the object, a pigment density and permeation map, a signature pantone code, a signature tag word, a calligraphic sign vector, and a calligraphic pressure map.
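Pantone codes come from a proprietary color library, so the sketch below stops one step earlier: it posterizes 8-bit RGB pixels to a few levels per channel and reports the most frequent posterized colors with the percentage of pixels each covers. Mapping each color to a Pantone code would require a licensed lookup table, which is not shown; the function names and the four-level default are assumptions.

```python
from collections import Counter
from typing import Iterable, List, Tuple

Pixel = Tuple[int, int, int]


def posterize(pixel: Pixel, levels: int = 4) -> Pixel:
    """Snap each 0-255 channel to the nearest of `levels` evenly spaced values."""
    if levels < 2:
        raise ValueError("levels must be at least 2")
    step = 255 / (levels - 1)
    r, g, b = (int(round(round(c / step) * step)) for c in pixel)
    return (r, g, b)


def posterized_palette(pixels: Iterable[Pixel], levels: int = 4, top: int = 5) -> List[Tuple[Pixel, float]]:
    """Most frequent posterized colors and the percentage of pixels each covers."""
    counts = Counter(posterize(p, levels) for p in pixels)
    total = sum(counts.values())
    if total == 0:
        return []
    return [(color, 100.0 * n / total) for color, n in counts.most_common(top)]


pixels = [(250, 10, 10), (240, 20, 5), (10, 10, 200), (250, 0, 0)]
palette = posterized_palette(pixels)
```

With four levels, every channel snaps to 0, 85, 170, or 255, so the three near-red pixels collapse to one palette entry covering 75% of the sample.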

[0074] The comparison data processor 238 can process one or more portions of the comparison data 222, including to identify one or more comparison datasets to use in generating an origin, an origin score, an authenticity estimation, an authenticity score, or any other authenticity result, for an object. For example, the comparison data processor 238 may identify a comparison dataset based on one or more similarities between the comparison dataset and the object for which an origin may be determined or for which an origin score or authenticity score will be generated. More specifically, the comparison data processor 238 may identify a comparison dataset from a plurality of additional objects that were created within the same geographic region, or during substantially the same time period, as the object to be authenticated.
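Selecting comparison objects by creation context can be sketched as a metadata filter. The record fields, the 10-year window standing in for "substantially the same time period," and the `require_both` switch are hypothetical choices for illustration.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class CatalogObject:
    object_id: str
    region: str
    year: int  # approximate creation year


def select_by_context(
    catalog: Iterable[CatalogObject],
    region: str,
    year: int,
    year_window: int = 10,
    require_both: bool = False,
) -> List[str]:
    """IDs of catalog objects from the same region and/or a nearby period."""
    selected = []
    for obj in catalog:
        same_region = obj.region == region
        same_period = abs(obj.year - year) <= year_window
        keep = (same_region and same_period) if require_both else (same_region or same_period)
        if keep:
            selected.append(obj.object_id)
    return selected


catalog = [
    CatalogObject("a", "Tuscany", 1510),
    CatalogObject("b", "Flanders", 1512),
    CatalogObject("c", "Tuscany", 1650),
    CatalogObject("d", "Flanders", 1700),
]
either = select_by_context(catalog, "Tuscany", 1505)
both = select_by_context(catalog, "Tuscany", 1505, require_both=True)
```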

[0075] Alternatively, or in addition, the comparison data processor 238 may identify a comparison dataset based on one or more object categories associated with, or one or more additional objects that include, one or more feature variables that are substantially similar to (e.g., nearly identical or having only difference(s) below a specified threshold) the one or more feature variables determined for the object being authenticated. Alternatively, in some embodiments, the comparison data processor 238 may identify a comparison dataset from a plurality of objects that includes only a minor subset (e.g., a small minority) of objects that are substantially similar to the object being authenticated. The comparison data processor 238 may determine the number of additional objects to include in a comparison dataset based, in part, on the number of substantially similar objects it will include and on the extent of any similarities (e.g., a match percentage) between the additional objects of the comparison dataset and the object to be authenticated.
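
The "minor subset" variant can be sketched as follows, assuming numeric feature variables keyed by name. Here "substantially similar" means every shared feature variable differs by at most `tolerance`, and the number k of similar objects kept is capped so that they make up at most a fraction f (`max_similar_fraction`) of the dataset: k / (k + m) <= f, i.e. k <= f * m / (1 - f), where m is the number of other objects. All names and default values are hypothetical.

```python
import math


def match_percentage(a: dict[str, float], b: dict[str, float], tolerance: float) -> float:
    """Percent of shared feature variables whose values differ by at most `tolerance`."""
    shared = a.keys() & b.keys()
    if not shared:
        return 0.0
    hits = sum(1 for k in shared if abs(a[k] - b[k]) <= tolerance)
    return 100.0 * hits / len(shared)


def build_comparison_dataset(
    target: dict[str, float],
    candidates: dict[str, dict[str, float]],
    tolerance: float = 0.05,
    similar_cutoff: float = 100.0,
    max_similar_fraction: float = 0.2,
) -> list[str]:
    """Return candidate ids in which substantially similar objects form at most
    max_similar_fraction of the dataset."""
    if not 0.0 <= max_similar_fraction < 1.0:
        raise ValueError("max_similar_fraction must be in [0, 1)")
    similar: list[tuple[float, str]] = []
    other: list[str] = []
    for obj_id, feats in candidates.items():
        pct = match_percentage(target, feats, tolerance)
        if pct >= similar_cutoff:
            similar.append((pct, obj_id))
        else:
            other.append(obj_id)
    # Most similar first; ties broken by id for determinism.
    similar.sort(key=lambda t: (-t[0], t[1]))
    # Largest k with k / (k + len(other)) <= max_similar_fraction.
    k = min(len(similar),
            math.floor(max_similar_fraction / (1.0 - max_similar_fraction) * len(other)))
    return [obj_id for _, obj_id in similar[:k]] + other
```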

[0076] Additionally, the comparison data processor 238 may modify (e.g., update) one or more portions of the comparison data 222 according to a modified comparison dataset. The modified comparison dataset may be determined from, or indicated by, one or more of the outputs generated by one or more components of the server 210 (e.g., the extraction engine 234, the feature variable engine 236, and the provenance engine 240). For example, the provenance engine 240 and the feature variable engine 236 may output, via one or more machine learning models used to generate a provenance score, modified metadata for the comparison dataset used to generate a new provenance score; this metadata identifies a modified set of associated objects and/or aspects of those objects (e.g., origin profile(s), object categories, etc.). Likewise, when the machine learning models are used to generate an authenticity score or an origin score, the provenance engine 240 and the feature variable engine 236 may output modified metadata for the comparison dataset used to generate a new authenticity score or a new origin score, respectively, identifying a modified set of associated objects and/or aspects of those objects (e.g., origin profile(s), object categories, etc.).

[0077] The comparison data processor 238 can modify a portion of the comparison data 222 based on the output(s) generated by the one or more artificial intelligence models 229 (e.g., a modified comparison dataset indicated by the output(s) generated by one or more machine learning models implemented by the provenance engine 240). For example, the comparison data processor 238 may modify a portion of the comparison data 222 (e.g., a first comparison dataset) according to a determination to add a new object to a set of objects associated with one or more of the following: one or more characteristics of an object; one or more origin profiles (e.g., one or more origin profiles for origin(s) that taught, criticized, or otherwise influenced the origin(s) of an associated object); one or more object categories (e.g., a “footwear” category, an “accessory” category, a “handbag” category, etc.); one or more geographic regions (e.g., a geographic region where an object was created or with which it is otherwise associated); and one or more time periods (e.g., a time period defined by one or more origin(s), a time period defined by the creation of one or more objects, etc.). As another example, the comparison data processor 238 can determine one or more portions of the comparison data 222, associated with one or more characteristics, to include in a comparison dataset used to generate an origin, origin score, and/or authenticity score of an object (e.g., object 110).
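
One way to picture the update in [0077], adding a newly authenticated object to the sets it is associated with, is a simple inverted index. This is a hypothetical in-memory stand-in; the patent does not describe how comparison data 222 is stored, and `ComparisonIndex` and its methods are illustrative names.

```python
from collections import defaultdict
from typing import Iterable


class ComparisonIndex:
    """Hypothetical stand-in for comparison data 222: for each association
    dimension (category, region, period, origin profile, ...) map each value
    to the set of object ids associated with it."""

    def __init__(self) -> None:
        self._index: defaultdict[str, defaultdict[str, set[str]]] = defaultdict(
            lambda: defaultdict(set)
        )

    def add_object(self, object_id: str, associations: dict[str, Iterable[str]]) -> None:
        """Add a newly authenticated object to every set it is associated with."""
        for dimension, values in associations.items():
            for value in values:
                self._index[dimension][value].add(object_id)

    def objects_for(self, dimension: str, value: str) -> set[str]:
        """Return a copy of the object ids associated with (dimension, value)."""
        return set(self._index.get(dimension, {}).get(value, set()))
```

Returning a copy from `objects_for` keeps callers from mutating the index directly, so every change goes through `add_object`.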

[0078] The provenance engine 240 may generate a provenance score (or other provenance indication) for the object whose image data is received by the authentication server 210 (e.g., from one of the client devices 250, 252, 254). The provenance engine 240 may include one or more artificial intelligence models 229 that are trained to generate one or more outputs based on one or more inputs. The one or more outputs may be generated based, for example, at least in part on a comparison of the determined set of feature variables and the comparison dataset identified by the comparison data processor 238. The provenance engine 240 may execute the one or more artificial intelligence models 229 to generate an output indicative of a provenance score for the object (i.e., the object of the determined set of feature variables) and one or more outputs indicative of change(s) to the comparison dataset, which reflect the new comparison data generated from the authentication of the first object. The provenance engine 240 may execute a first artificial intelligence model trained to generate an output indicative of a provenance score for the first object (e.g., the object to be authenticated), based on inputs that include at least the determined set of feature variables (of the object to be authenticated) and the comparison dataset output by the comparison data processor 238. For example, the first artificial intelligence model may be trained to generate the output indicative of the provenance score based on a determined match percentage between the determined set of feature variables and one or more corresponding sets of feature variables (e.g., one or more sets of feature variables that exhibit a matching percentage, i.e., a percentage of matching feature variables, that is directly correlated with (e.g., proportional to) the probable provenance of, and thus the provenance score generated for, the object being authenticated by system 200).
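
The patent trains artificial intelligence models 229 for this step. The sketch below is not such a model; it only illustrates the stated relationship that the score is directly correlated with (here, simply proportional to) the match percentage. It assumes categorical feature variables, such as a signature tag word or a signature pantone code, compared by exact equality, with reference feature sets grouped by candidate origin. `provenance_scores` and the example values are hypothetical.

```python
from collections.abc import Hashable

FeatureSet = dict[str, Hashable]


def best_match_fraction(features: FeatureSet, reference_sets: list[FeatureSet]) -> float:
    """Highest fraction of shared feature variables whose values are equal."""
    best = 0.0
    for ref in reference_sets:
        shared = features.keys() & ref.keys()
        if shared:
            hits = sum(1 for k in shared if features[k] == ref[k])
            best = max(best, hits / len(shared))
    return best


def provenance_scores(
    features: FeatureSet, references: dict[str, list[FeatureSet]]
) -> dict[str, float]:
    """Score each candidate origin in proportion to its best match fraction."""
    return {origin: best_match_fraction(features, refs) for origin, refs in references.items()}


features = {"signature_tag_word": "ROSSI", "signature_pantone_code": "P-186",
            "pantone_series": ("P-1", "P-2")}
references = {
    "origin_a": [{"signature_tag_word": "ROSSI", "signature_pantone_code": "P-186",
                  "pantone_series": ("P-1", "P-3")}],
    "origin_b": [{"signature_tag_word": "BIANCHI", "signature_pantone_code": "P-300",
                  "pantone_series": ("P-1", "P-2")}],
}
scores = provenance_scores(features, references)
print(max(scores, key=scores.get))  # origin_a
```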

[0079] In some embodiments, the provenance engine 240 can partition, into two or more subsets, each of the following that correspond to an object to be authenticated: one or more feature variables, a plurality of different image data packs, one or more datasets extracted from an image data pack, and the received digital image data for that object. For example, the provenance engine 240 can partition, for an object to be authenticated, each of the information listed above into two or more subsets associated with different potential sources. The provenance engine 240 may, for example, use the two or more subsets associated with different sources for a comparison of the information for an object against itself, which may be part of the process to generate the provenance score of that object. In some embodiments, the provenance engine 240 (or authentication server 210) can partition the information above into at least one subset associated with a source and one or more subsets not associated with any source, or for which no source can be determined.
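
A minimal sketch of the partitioning step, assuming each item is tagged with its kind and an optional source identifier. What counts as a "source" (for example, a particular camera or uploader) is not defined in the patent, so the tuple layout and the `UNATTRIBUTED` bucket for items with no determinable source are assumptions.

```python
from collections import defaultdict
from typing import Optional

UNATTRIBUTED = "<no source>"

# (kind, item_id, source): kind is e.g. "feature_variable", "image_data_pack",
# "extracted_dataset" or "digital_image"; source is None when it cannot be determined.
Item = tuple[str, str, Optional[str]]


def partition_by_source(items: list[Item]) -> dict[str, dict[str, list[str]]]:
    """Group every item into a per-source subset, keyed by item kind."""
    subsets: defaultdict[str, defaultdict[str, list[str]]] = defaultdict(
        lambda: defaultdict(list)
    )
    for kind, item_id, source in items:
        key = source if source is not None else UNATTRIBUTED
        subsets[key][kind].append(item_id)
    # Convert to plain dicts so later lookups cannot create empty subsets by accident.
    return {src: dict(kinds) for src, kinds in subsets.items()}
```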

[0080] In some embodiments, the provenance engine 240 (or the processors of the authentication server 210) can determine a consistency metric of the two or more subsets based on a comparison of one or more portions of two or more corresponding datasets in each of the different subsets. The consistency metric may be determined with a comparison of two or more entire subsets (e.g., using corresponding values or metrics that are representative of, or based on, the contents of the corresponding subset). Alternatively, or in addition, the consistency metric of two or more subsets may be determined by a piecewise comparison using the corresponding individual types of data contained in the two or more subsets (e.g., comparing, between the two or more subsets, each of the following: feature variables, digital image data packs, datasets extracted from digital image data packs, etc.). In some embodiments, the consistency metric of two or more subsets may be based on a variance (e.g., variance data or vector) between the two or more subsets (or, for a piecewise comparison, their individual contents).
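
The two comparison styles described above, piecewise and whole-subset, can be sketched with population variance from Python's `statistics` module. The sketch assumes each subset has been reduced to numeric feature variables keyed by name; using the mean of a subset as its representative value is an illustrative choice, and a real system would likely normalize features with different units first. Function names are hypothetical.

```python
from statistics import mean, pvariance


def piecewise_consistency(subsets: dict[str, dict[str, float]]) -> dict[str, float]:
    """Variance, across subsets, of each feature variable they all share.
    Lower values mean the subsets agree more closely."""
    if len(subsets) < 2:
        raise ValueError("need at least two subsets")
    shared = set.intersection(*(set(s) for s in subsets.values()))
    return {name: pvariance([s[name] for s in subsets.values()]) for name in sorted(shared)}


def whole_subset_consistency(subsets: dict[str, dict[str, float]]) -> float:
    """Variance of one representative value (here the mean) per subset."""
    if len(subsets) < 2:
        raise ValueError("need at least two subsets")
    return pvariance([mean(s.values()) for s in subsets.values()])


subsets = {
    "src1": {"brights_pct": 40.0, "mid_tones_pct": 30.0},
    "src2": {"brights_pct": 44.0, "mid_tones_pct": 30.0},
}
print(piecewise_consistency(subsets))     # {'brights_pct': 4.0, 'mid_tones_pct': 0.0}
print(whole_subset_consistency(subsets))  # 1.0
```

The piecewise result pinpoints which feature variable disagrees between sources, while the whole-subset value is a single summary that can hide offsetting differences.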

[0081] In some embodiments, the provenance engine 240 (or the processors of the authentication server 210) can determine an internal consistency metric for a single subset (e.g., one of the two or more subsets partitioned by the provenance engine 240, as described above) based on a comparison of one or more portions of data in the different datasets (e.g., feature variables, digital image data, digital image data packs, etc.) within that same subset. For example, the provenance engine 240 may determine an internal consistency metric based on a variance of one or more types of datasets contained in that subset (e.g., a variance of the feature variables, a variance of the digital image data packs, etc.). Alternatively, or in addition, the internal consistency metric for a single subset may be determined based on a comparison of an expected variance between two different types of datasets of the subset with an actual, or determined, variance between those same two types of datasets (e.g., a comparison of an expected variance between a feature variable and a digital image data pack with the actual variance determined for that feature variable and digital image data pack within the same subset).
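
Under similar assumptions (numeric values, population variance), the internal consistency metric can be illustrated in two ways: the variance of each dataset type inside one subset, and the gap between an expected and an observed variance of the differences between paired values drawn from two dataset types. The pairing scheme and the function names are hypothetical.

```python
from statistics import pvariance


def internal_variances(subset: dict[str, list[float]]) -> dict[str, float]:
    """Variance of each dataset type (e.g. repeated measurements of one
    feature variable) inside a single subset."""
    return {kind: pvariance(values) for kind, values in subset.items() if values}


def expected_vs_actual(pairs: list[tuple[float, float]], expected_variance: float) -> float:
    """Gap between the expected and the observed variance of the differences
    between paired values from two dataset types of the same subset."""
    diffs = [a - b for a, b in pairs]
    return abs(pvariance(diffs) - expected_variance)


print(internal_variances({"brights_pct": [40.0, 44.0], "mid_tones_pct": [30.0, 30.0]}))
# {'brights_pct': 4.0, 'mid_tones_pct': 0.0}
print(expected_vs_actual([(10.0, 9.0), (12.0, 12.0), (8.0, 7.0), (11.0, 12.0)], 0.5))
# 0.1875
```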

[0082] In some embodiments, the provenance engine 240 can determine, based on a comparison of the consistency metric of the two or more subsets and the internal consistency metric of a single subset, a confidence score for one or more of the datasets (e.g., one or more feature variables, image data packs, etc.) associated with the first object (or its digital image data). For example, in some embodiments, the provenance engine 240 can determine a confidence score based on whether either (or both) of the consistency metric of the two or more subsets and the internal consistency metric are less than a threshold. Alternatively, or in addition, the provenance engine 240 may determine a confidence score based on a determination that the difference between the two consistency metrics does, or does not, exceed a threshold. In some embodiments, the provenance engine 240 can determine a confidence score based on the difference between an expected mathematical relation of the consistency metrics and a corresponding actual, or computed, mathematical relation of the consistency metrics.
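
A hypothetical way to combine the checks listed above into a single number is to report the fraction that pass. The sketch assumes both metrics are variance-like (lower means more consistent); the thresholds, the optional expected ratio used as the "expected mathematical relation", and the equal weighting of checks are all illustrative choices rather than details from the patent.

```python
from typing import Optional


def confidence_score(
    cross_metric: float,
    internal_metric: float,
    metric_threshold: float = 1.0,
    diff_threshold: float = 0.5,
    expected_ratio: Optional[float] = None,
) -> float:
    """Fraction of consistency checks passed, in [0, 1].

    Both metrics are variance-like: lower means more consistent."""
    checks = [
        cross_metric < metric_threshold,                        # subsets agree with each other
        internal_metric < metric_threshold,                     # one subset agrees with itself
        abs(cross_metric - internal_metric) <= diff_threshold,  # the two metrics agree
    ]
    if expected_ratio is not None and internal_metric > 0:
        # Compare the expected relation between the metrics with the computed one.
        checks.append(abs(cross_metric / internal_metric - expected_ratio) <= diff_threshold)
    return sum(checks) / len(checks)


print(confidence_score(0.4, 0.6))  # 1.0
print(confidence_score(2.0, 0.6))  # 0.3333333333333333
```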

[0083] In an aspect, the provenance engine 240 may comprise or include an authenticity engine that may generate an authenticity score (or other authenticity indication) for the object whose image data is received by the authentication server 210 (e.g., from one of the client devices 250, 252, 254). The authenticity engine may include one or more artificial intelligence models 229 that are trained to generate one or more outputs based on one or more inputs. The one or more outputs may be generated based, for example, at least in part on a comparison of the determined set of feature variables and the comparison dataset identified by the comparison data processor 238. The authenticity engine may execute the one or more artificial intelligence models 229 to generate an output indicative of an authenticity score for the object (i.e., the object of the determined set of feature variables) and one or more outputs indicative of change(s) to the comparison dataset, which reflect the new comparison data generated from the authentication of the first object. The authenticity engine may execute a first artificial intelligence model trained to generate an output indicative of an authenticity score for the first object (e.g., the object to be authenticated), based on inputs that include at least the determined set of feature variables (of the object to be authenticated) and the comparison dataset output by the comparison data processor 238. For example, the first artificial intelligence model may be trained to generate the output indicative of the authenticity score based on a determined match percentage between the determined set of feature variables and one or more corresponding sets of feature variables (e.g., one or more sets of feature variables that exhibit a matching percentage, i.e., a percentage of matching feature variables, that is directly correlated with (e.g., proportional to) the probable authenticity of, and thus the authenticity score generated for, the object being authenticated by the system 200).

[0084] In some embodiments, the authenticity engine can partition, into two or more subsets, each of the following that correspond to an object to be authenticated: one or more feature variables, a plurality of different image data packs, one or more datasets extracted from an image data pack, and the received digital image data for that object. For example, the authenticity engine can partition, for an object to be authenticated, each of the information listed above into two or more subsets associated with different potential sources. The authenticity engine may, for example, use the two or more subsets associated with different sources for a comparison of the information for an object against itself, which may be part of the process to generate the authenticity score of that object. In some embodiments, the authenticity engine can partition the information above into at least one subset associated with a source and one or more subsets not associated with any source, or for which no source can be determined.

[0085] In some embodiments, the authenticity engine can determine a consistency metric of the two or more subsets based on a comparison of one or more portions of two or more corresponding datasets in each of the different subsets. The consistency metric may be determined with a comparison of two or more entire subsets (e.g., using corresponding values or metrics that are representative of, or based on, the contents of the corresponding subset). Alternatively, or in addition, the consistency metric of two or more subsets may be determined by a piecewise comparison using the corresponding individual types of data contained in the two or more subsets (e.g., comparing, between the two or more subsets, each of the following: feature variables, digital image data packs, datasets extracted from digital image data packs, etc.). In some embodiments, the consistency metric of two or more subsets may be based on a variance (e.g., variance data or vector) between the two or more subsets (or, for a piecewise comparison, their individual contents).